Data Science Best Practices: Ensure Success With These Expert Tips

Data science best practices help companies make sound decisions and shape strategy. This article covers the practices that matter most: defining goals and success metrics, managing data quality, cleaning data, choosing algorithms, communicating results, ensuring reproducibility, and picking useful tools. Applying them consistently makes projects more efficient and their outcomes more reliable.

Key Best Practices in Data Science

Data science projects combine several skills: mathematics, statistics, programming, and domain knowledge. Technical skill matters, but the essential task is turning raw data into insights that serve business goals. Sound data analysis techniques make deriving those insights easier, and established best practices raise the odds of success by making findings trustworthy and actionable.

Grasping the Project Aims and Business Goals

Understanding the project's aims and business goals is the first step. It keeps the analysis relevant and useful to the organization, and it helps teams align their analytical work with strategic initiatives.

- Identify Stakeholders:

Start by talking to stakeholders. Set up interviews or meetings with decision-makers and end-users of the data insights. Ask questions such as:

What challenges are you facing right now?

How do you think data can help solve these problems?

What decisions will change because of this project?

- Define Clear Objectives:

After speaking with stakeholders, set clear, measurable objectives using the SMART criteria: specific, measurable, achievable, relevant, and time-bound. For example, if a stakeholder wants to improve customer experience, a specific goal could be to raise the Net Promoter Score (NPS) by 15% within six months.

- Align with KPIs:

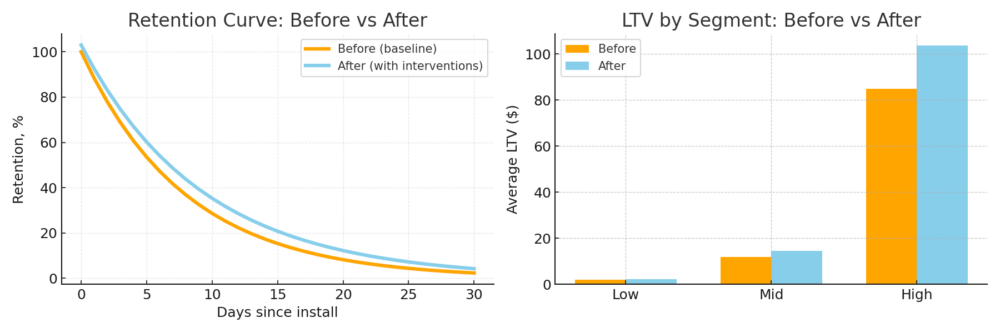

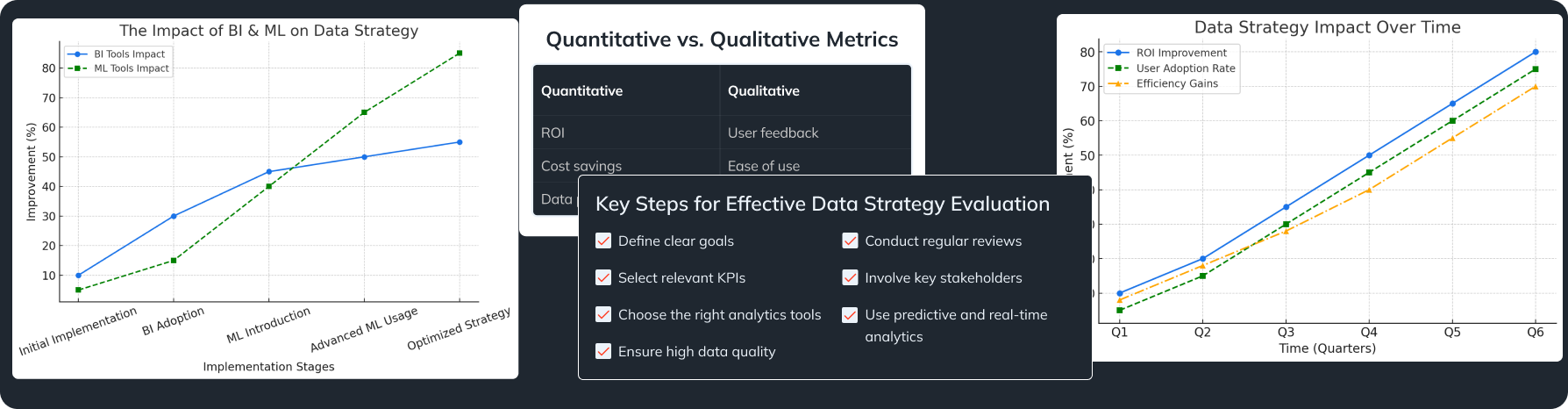

Link each objective to Key Performance Indicators (KPIs), the benchmarks used to track progress. For example, if the goal is to retain customers longer, relevant KPIs include churn rate and customer lifetime value (CLV). Tying objectives to KPIs also makes the team more accountable during execution.
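To make the KPI examples concrete, here is a minimal Python sketch of churn rate and a simplified customer lifetime value. The function names and figures are hypothetical, and the CLV formula shown (margin per period divided by churn rate) is only one common simplification: it assumes constant revenue, margin, and churn, and it ignores discounting.

```python
def churn_rate(customers_at_start: int, customers_lost: int) -> float:
    """Share of the customers present at the start of a period who left during it."""
    if customers_at_start <= 0:
        raise ValueError("customers_at_start must be positive")
    return customers_lost / customers_at_start


def simple_clv(avg_revenue_per_period: float, gross_margin: float, churn: float) -> float:
    """Simplified CLV (hypothetical helper): margin earned per period divided by churn.

    Assumes constant revenue, margin, and churn, and applies no discounting.
    """
    if churn <= 0:
        raise ValueError("churn must be positive")
    return avg_revenue_per_period * gross_margin / churn


# Hypothetical quarter: 2,000 customers at the start, 150 of whom cancelled.
rate = churn_rate(2000, 150)
print(f"Quarterly churn: {rate:.1%}")  # Quarterly churn: 7.5%
print(f"CLV: {simple_clv(120.0, 0.6, rate):.2f}")  # CLV: 960.00
```

Lowering churn raises CLV directly in this formula, which is why the two KPIs are usually tracked together.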

Setting Explicit Success Metrics for Assessment

Setting clear success metrics from the beginning is essential for measuring progress and success in data science projects.

- Choose Relevant Metrics:

Select metrics that reflect business goals in measurable ways. For instance, if the aim is to increase website engagement, relevant metrics could be visitor retention rate or average session duration.

- Baseline Measurement:

Collect baseline data for these metrics before making any changes, and summarize the historical data in a short report. For example, if the average session duration is 3 minutes before the improvements, you can compare it with the figure measured three months later. Establishing a baseline is a step that should never be skipped.

- Continuous Monitoring:

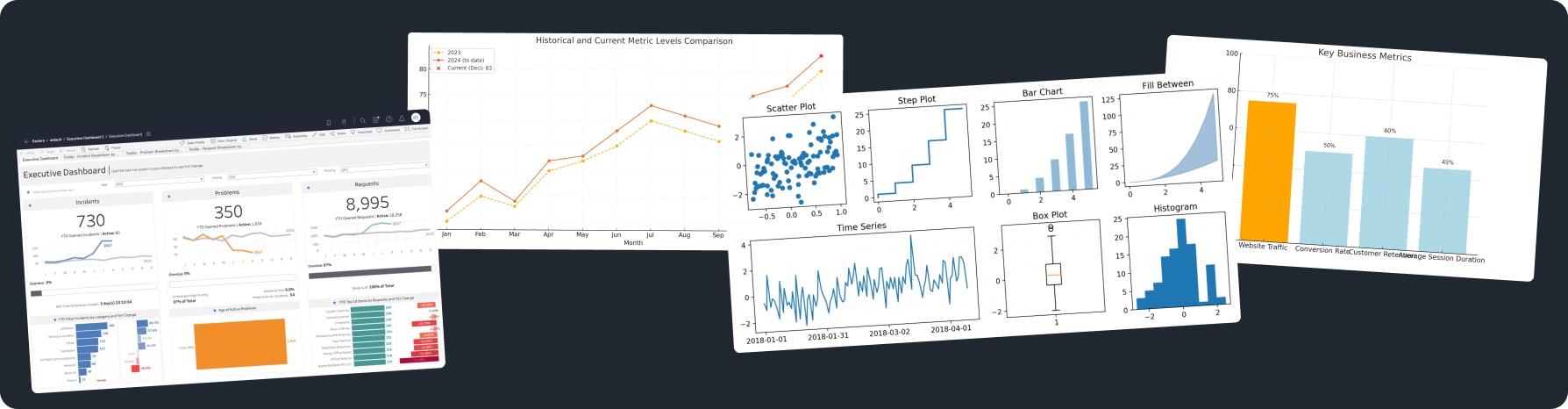

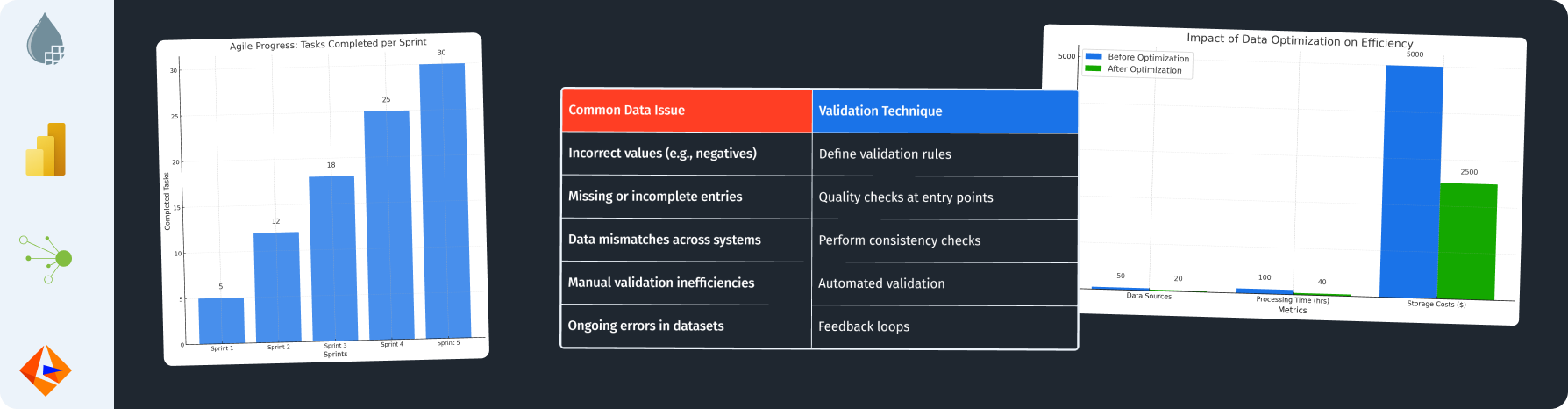

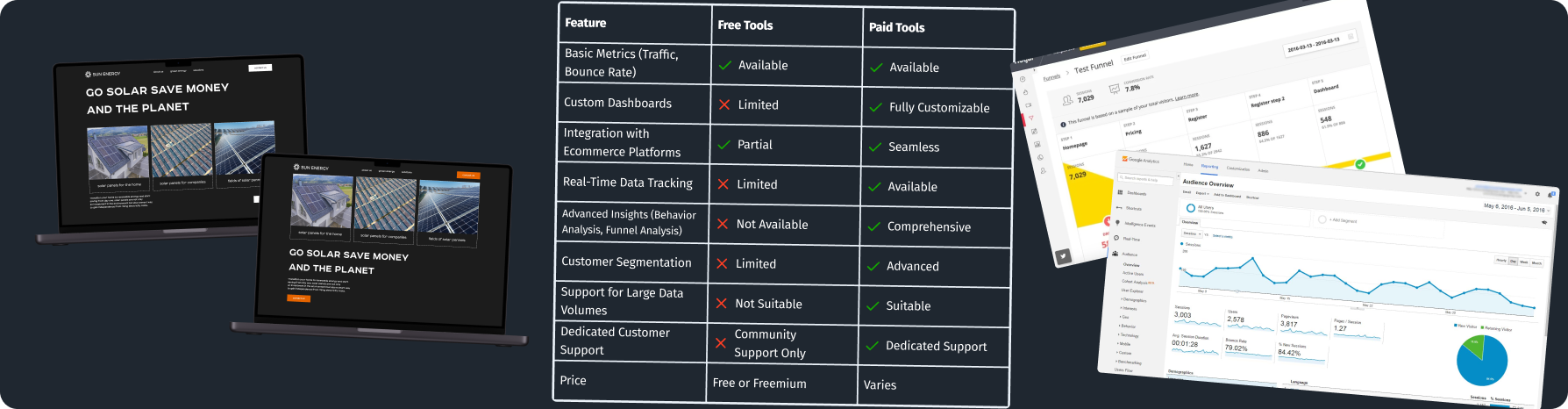

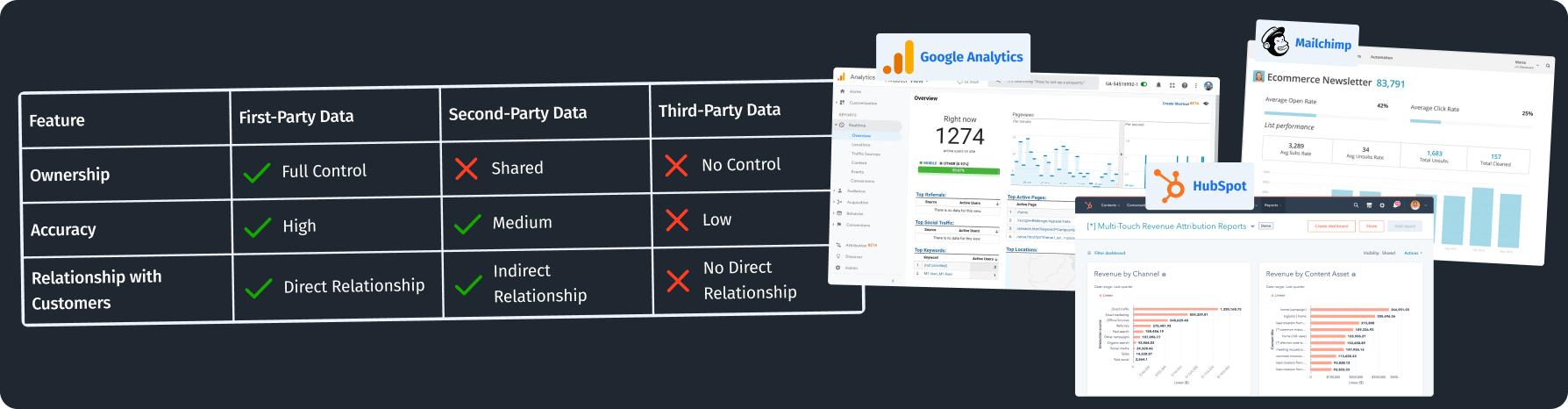

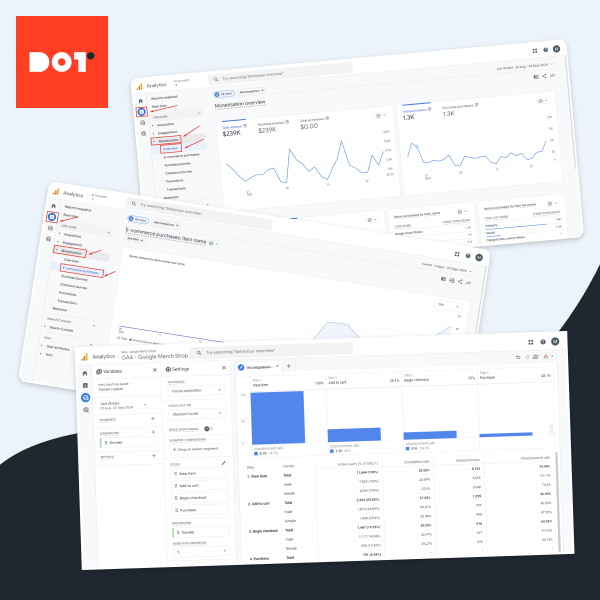

Set up systems to monitor your metrics continuously. Dashboards visualize changes as they happen, and tools such as Tableau or Google Data Studio (now Looker Studio) present updates clearly to all stakeholders.
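A baseline comparison like the session-duration example can be sketched with pandas. The session log, the launch date, and the column names below are assumptions for illustration; the monthly series at the end is the kind of data a monitoring dashboard would plot.

```python
import pandas as pd

# Hypothetical session log: one row per session, duration in minutes.
sessions = pd.DataFrame({
    "date": pd.to_datetime([
        "2024-01-05", "2024-01-18", "2024-02-02", "2024-02-20",
        "2024-04-03", "2024-04-15", "2024-05-01", "2024-05-22",
    ]),
    "duration_min": [2.5, 3.5, 3.0, 3.0, 3.5, 4.0, 4.5, 4.0],
})

# Assumed launch date of the change being evaluated.
launch = pd.Timestamp("2024-03-01")

# Baseline = average before launch; compare it with the average after launch.
baseline = sessions.loc[sessions["date"] < launch, "duration_min"].mean()
after = sessions.loc[sessions["date"] >= launch, "duration_min"].mean()
change = (after - baseline) / baseline
print(f"Baseline: {baseline:.2f} min, after: {after:.2f} min, change: {change:+.1%}")
# Baseline: 3.00 min, after: 4.00 min, change: +33.3%

# Monthly averages for a dashboard; months without sessions show NaN.
monthly = sessions.set_index("date")["duration_min"].resample("MS").mean()
print(monthly.round(2))
```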

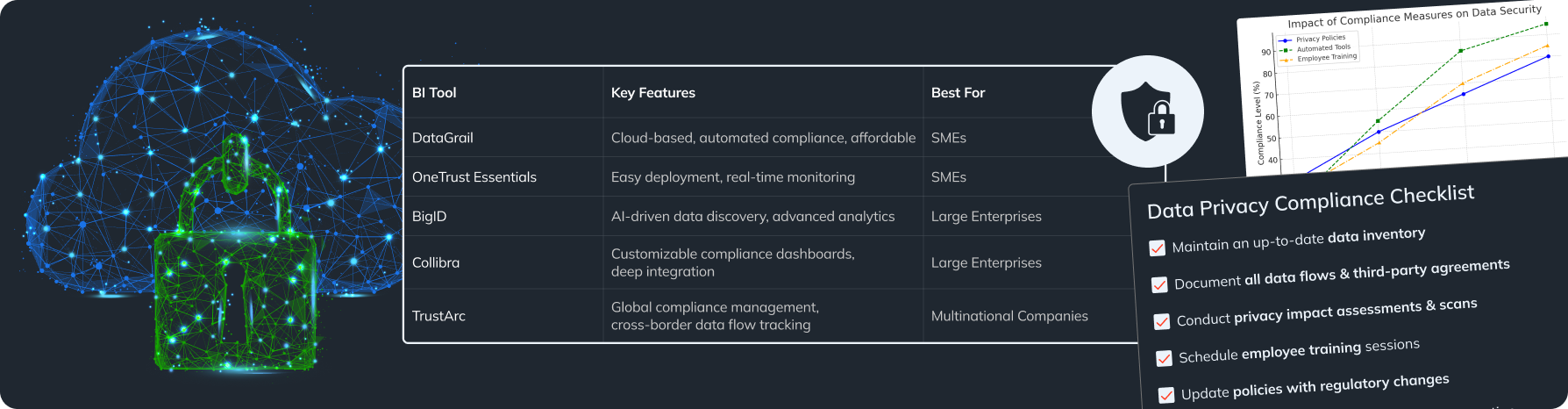

Managing Data Quality and Integrity

Data quality is critical. Poor-quality data leads to wrong conclusions and erodes trust in data insights, so maintaining it is a core best practice.

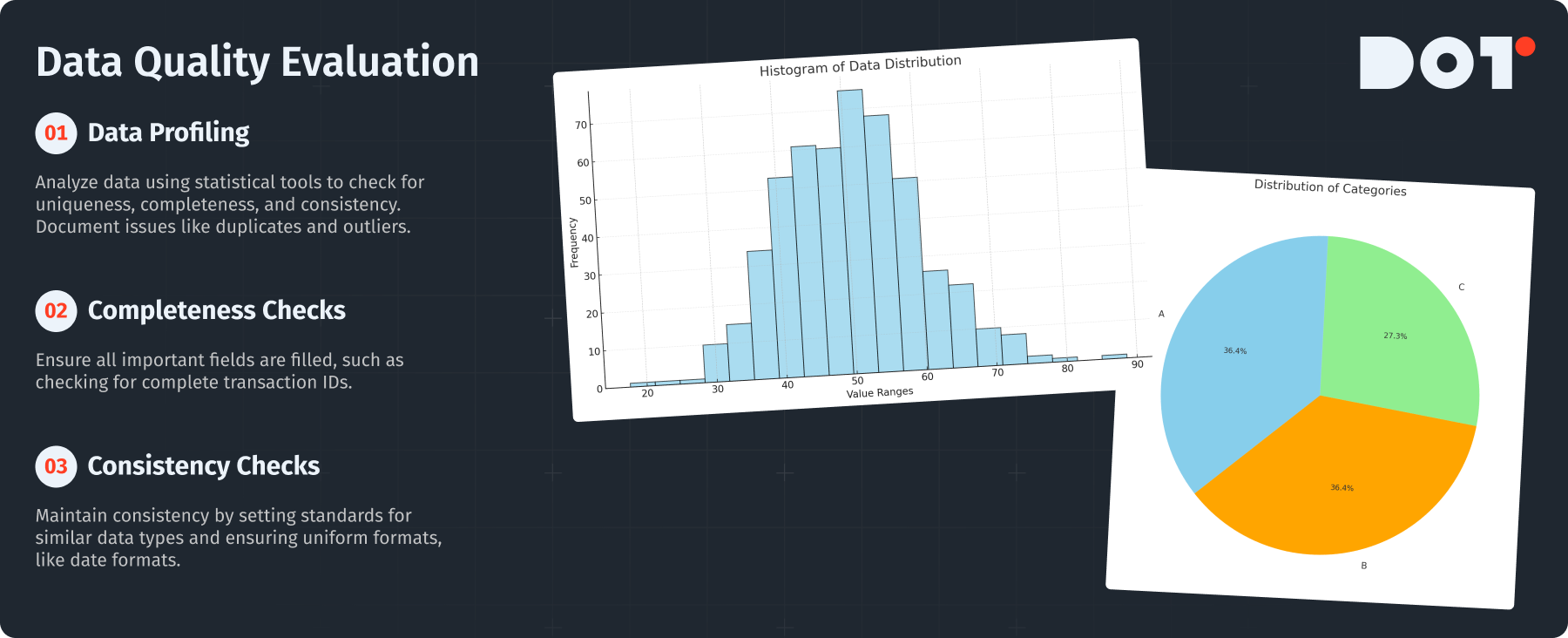

Methods for Evaluating Data Quality

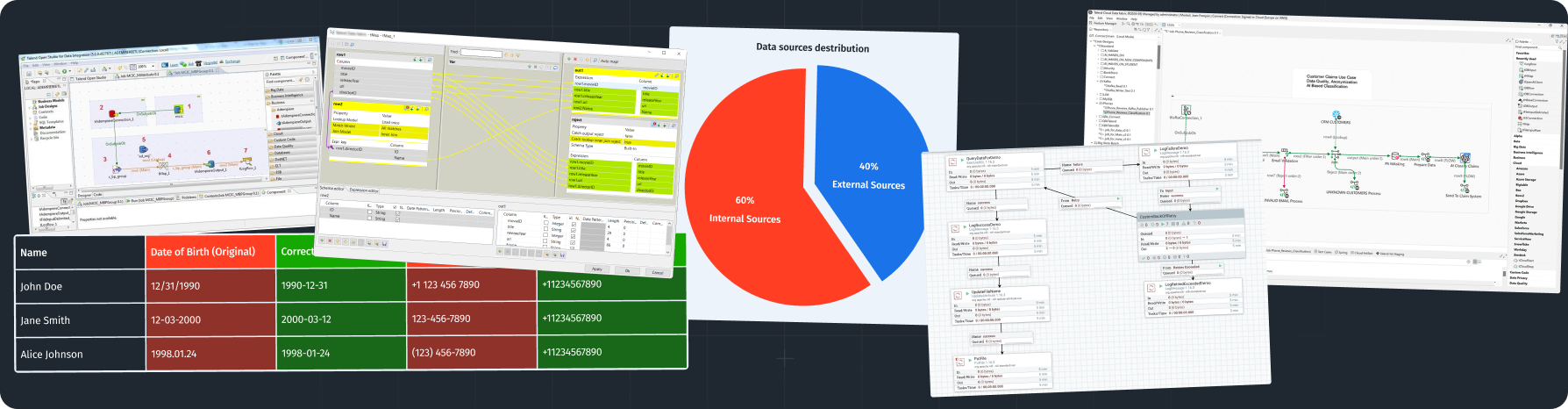

- Data Profiling: Start by profiling data. Use statistical tools to check datasets for completeness, uniqueness, and consistency. Tools like Pandas in Python can help analyze large datasets. Document any issues, like duplicates or outliers.

- Completeness Checks: Make sure all necessary fields are filled. Create a checklist of important data fields. For example, in a customer dataset, check that fields like transaction ID are complete.

- Consistency Checks: Keep datasets consistent by setting standards for similar data types. If two datasets use different names for the same field, standardize them. Use uniform formats, such as a single date format.
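The profiling, completeness, and consistency checks above might look like this in pandas. The dataset is invented, the ISO date format (YYYY-MM-DD) is an assumed house standard, and the 1.5 × IQR outlier rule is one common convention rather than a fixed requirement.

```python
import pandas as pd

# Hypothetical customer transactions with typical quality problems.
df = pd.DataFrame({
    "transaction_id": ["T1", "T2", "T2", None, "T5"],
    "customer": ["Ann", "Bob", "Bob", "Cy", "Di"],
    "date": ["2024-01-05", "2024-01-06", "2024-01-06", "06/01/2024", "2024-01-08"],
    "amount": [20.0, 35.5, 35.5, 12.0, 9_999.0],
})

# Profiling: missing values per column and fully duplicated rows.
missing_per_column = df.isna().sum()
duplicate_rows = int(df.duplicated().sum())

# Completeness: required fields must be filled.
required = ["transaction_id", "amount"]
incomplete = df[df[required].isna().any(axis=1)]

# Consistency: rows whose date does not match the agreed ISO format become NaT.
parsed = pd.to_datetime(df["date"], format="%Y-%m-%d", errors="coerce")
bad_dates = df[parsed.isna()]

# Outliers: flag amounts outside 1.5 * IQR of the quartiles.
q1, q3 = df["amount"].quantile([0.25, 0.75])
iqr = q3 - q1
outliers = df[(df["amount"] < q1 - 1.5 * iqr) | (df["amount"] > q3 + 1.5 * iqr)]

print(missing_per_column)
print("Duplicate rows:", duplicate_rows)
print("Incomplete rows:", list(incomplete.index))
print("Non-ISO dates:", list(bad_dates.index))
print("Outlier rows:", list(outliers.index))
```

Writing these findings to a log or report covers the "document any issues" step.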

Implementing Verification and Validation Processes

Setting up verification and validation processes helps ensure data integrity.

- Data Verification:

Check incoming data regularly to make sure it matches the expected formats and structures. Automated scripts can catch errors; for instance, an age field should never contain negative values.

- Validation Rules:

Establish rules for data input. For instance, ensure that date fields accept only valid dates in the agreed format, and show users the expected format when they enter data. Rules like these keep data accurate from the point of entry.
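Verification and validation rules can start as small, testable functions. This sketch assumes each record arrives as a Python dict; the field names, the `validate` helper, and the 0 to 130 age bound are hypothetical choices, not a standard.

```python
from datetime import datetime


def valid_age(value) -> bool:
    # Hypothetical rule: a whole number between 0 and 130 (bools excluded).
    return isinstance(value, int) and not isinstance(value, bool) and 0 <= value <= 130


def valid_date(value) -> bool:
    # Accept only real calendar dates in the assumed YYYY-MM-DD format.
    try:
        datetime.strptime(value, "%Y-%m-%d")
        return True
    except (TypeError, ValueError):
        return False


# Field name -> check function (illustrative field names).
RULES = {"age": valid_age, "signup_date": valid_date}


def validate(record: dict) -> list[str]:
    """Return a list of problems; an empty list means the record passes."""
    problems = []
    for field, check in RULES.items():
        if field not in record:
            problems.append(f"{field}: missing")
        elif not check(record[field]):
            problems.append(f"{field}: invalid value {record[field]!r}")
    return problems


print(validate({"age": 34, "signup_date": "2024-02-29"}))  # []
print(validate({"age": -4, "signup_date": "2024-13-01"}))
# ['age: invalid value -4', "signup_date: invalid value '2024-13-01'"]
```

Running such a function on every incoming batch, and rejecting or quarantining records with problems, keeps errors from accumulating downstream.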

Want to ensure the highest standards of data quality? Schedule a free 20-minute consultation with one of our experts.

Data Preprocessing and Cleaning Techniques

Cleaning data is an essential step before analysis. Raw data often contains missing values, duplicates, and irrelevant information, and careful preprocessing at this stage substantially improves the quality of the analysis that follows.

Popular Approaches for Addressing Missing Data

Handling missing data properly is key. Simply dropping incomplete rows discards information and can bias results when values are not missing at random.

- Imputation: This method substitutes missing values with estimates based on other data. Simple methods include:

- Mean Imputation: Replace missing data with the average of available values.

- Median Imputation: Use the median, which is better for skewed distributions.

- Predictive Modeling: More advanced methods like K-nearest neighbors (KNN) or regression models can predict missing data based on other variables. This works best when you have plenty of data and strong correlations between variables, and it yields a more robust dataset.
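Mean, median, and KNN imputation are all available in scikit-learn through `SimpleImputer` and `KNNImputer`. The toy data below is made up; it includes one very high income to show why the median is safer for skewed columns.

```python
import numpy as np
from sklearn.impute import KNNImputer, SimpleImputer

# Toy data: columns are age and income; np.nan marks missing values.
X = np.array([
    [25.0, 40_000.0],
    [32.0, np.nan],
    [np.nan, 52_000.0],
    [41.0, 61_000.0],
    [38.0, 250_000.0],  # one very high income skews the column
])

# Mean and median imputation: fill each column's gaps with a column statistic.
mean_filled = SimpleImputer(strategy="mean").fit_transform(X)
median_filled = SimpleImputer(strategy="median").fit_transform(X)

# KNN imputation: fill each gap from the 2 most similar rows,
# measuring similarity on the columns both rows have observed.
knn_filled = KNNImputer(n_neighbors=2).fit_transform(X)

print("Mean-imputed income:", mean_filled[1, 1])      # 100750.0
print("Median-imputed income:", median_filled[1, 1])  # 56500.0
```

The single outlier pulls the mean far above typical incomes, while the median stays close to them. Because KNN relies on distances, features on very different scales (like age and income here) should normally be scaled first; the sketch skips that step for brevity.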

Methods for Normalizing and Scaling Datasets

Normalization and scaling put features on comparable ranges, which many algorithms need, especially distance-based methods and models trained with gradient-based optimization.

- Min-Max Scaling:

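Transform values to the range 0 to 1 using (value - min) / (max - min). This works well when the data has known bounds and no extreme outliers, because a single extreme value compresses all the other values into a narrow band.

- Standardization: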

Subtract the mean and divide by the standard deviation: (value - mean) / standard deviation. The result has mean 0 and standard deviation 1 but no fixed range, so outliers distort it less than they distort min-max scaling, although extreme values still shift the mean and standard deviation themselves.
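Both formulas can be checked by hand against scikit-learn's `MinMaxScaler` and `StandardScaler`. The five sample values are arbitrary.

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler, StandardScaler

# One feature, five samples (scikit-learn expects a 2-D array: rows x features).
values = np.array([[10.0], [20.0], [30.0], [40.0], [50.0]])

# Min-max scaling: (value - min) / (max - min) maps the data onto [0, 1].
manual_minmax = (values - values.min()) / (values.max() - values.min())
sk_minmax = MinMaxScaler().fit_transform(values)

# Standardization: (value - mean) / std gives mean 0 and standard deviation 1.
# np.std and StandardScaler both use the population standard deviation.
manual_std = (values - values.mean()) / values.std()
sk_std = StandardScaler().fit_transform(values)

print(manual_minmax.ravel())        # [0.   0.25 0.5  0.75 1.  ]
print(np.round(sk_std.ravel(), 3))
```

In a real project, fit the scaler on the training data only and reuse it to transform validation and test data, so that information from the test set does not leak into training.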

Have questions about your data cleaning strategies? Connect with a Dot Analytics expert for a free consultation and get answers.

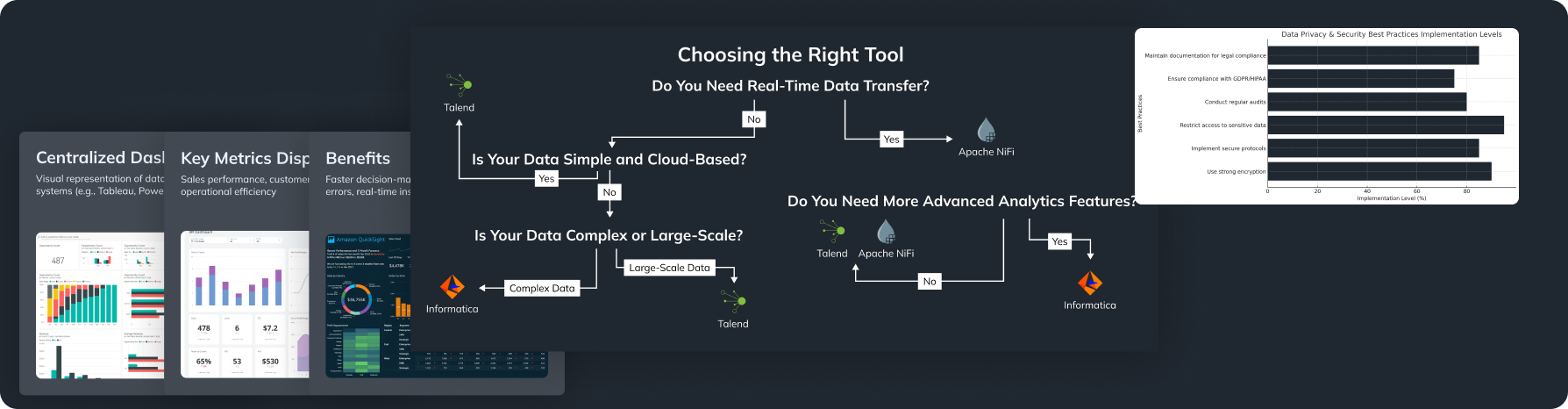

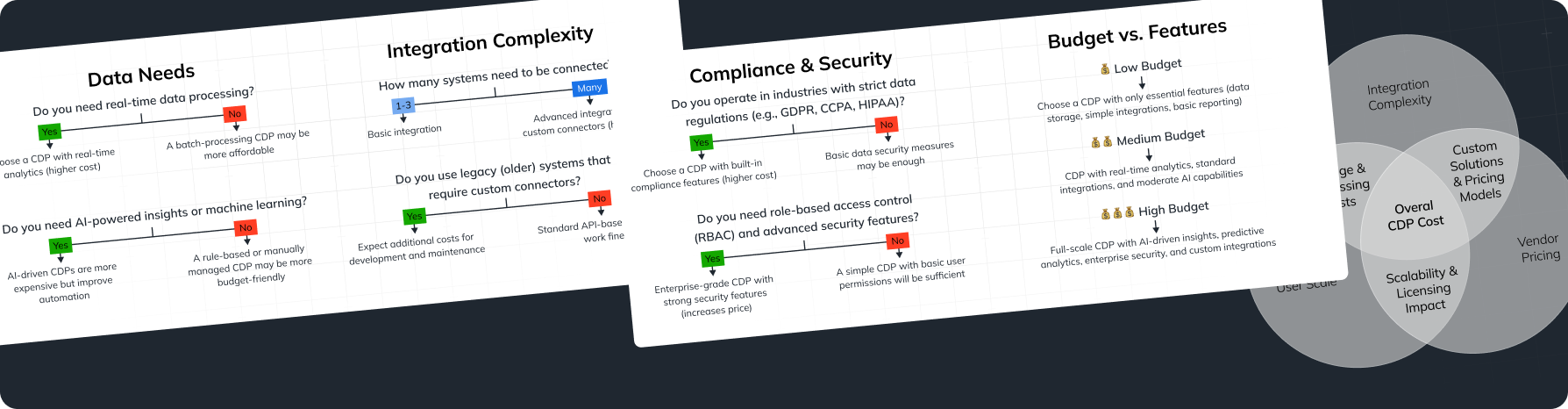

Choosing the Right Algorithms

Choosing the right algorithms is critical for the success of a data science project. There are many algorithms, and selecting the best can be tough. Following data science best practices can streamline this selection process significantly.

Considerations When Selecting Algorithms

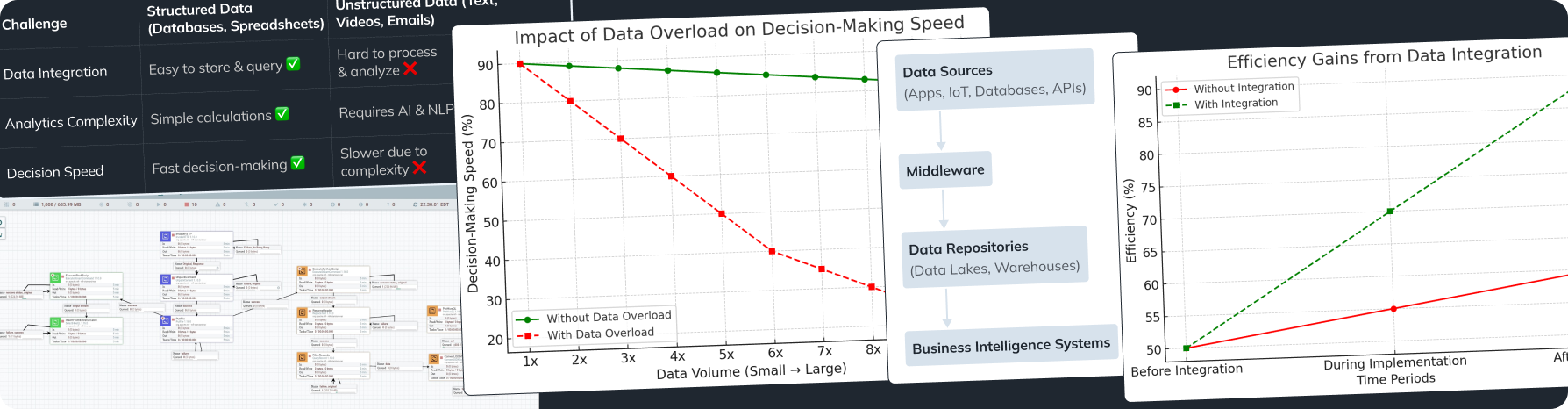

- Nature of Your Data: Know the type of data you have: Is it structured, semi-structured, or unstructured? Data can be numerical, categorical, text, etc., requiring different handling.

- Problem Type: Understand what kind of problem you’re solving. Is it classification (labeling data) or regression (predicting numbers)? Here are some examples:

- For Classification: Common starting points are Logistic Regression and Random Forest.

- For Regression: Common starting points are Linear Regression and Gradient Boosting.
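One way to turn the problem-type question into code is scikit-learn's `type_of_target` helper, which labels a target as binary, multiclass, continuous, and so on. The `candidate_models` function and its model lists are a hypothetical starting point that mirrors the examples above, not a selection rule.

```python
import numpy as np
from sklearn.ensemble import GradientBoostingRegressor, RandomForestClassifier
from sklearn.linear_model import LinearRegression, LogisticRegression
from sklearn.utils.multiclass import type_of_target


def candidate_models(y):
    """Hypothetical helper: suggest starting estimators from the target's type."""
    target_type = type_of_target(y)
    if target_type in ("binary", "multiclass"):
        return "classification", [
            LogisticRegression(max_iter=1000),
            RandomForestClassifier(random_state=0),
        ]
    if target_type == "continuous":
        return "regression", [
            LinearRegression(),
            GradientBoostingRegressor(random_state=0),
        ]
    raise ValueError(f"Unsupported target type: {target_type}")


kind, models = candidate_models(np.array([0, 1, 1, 0, 1]))
print(kind, [type(m).__name__ for m in models])
# classification ['LogisticRegression', 'RandomForestClassifier']
```

Integer-valued targets such as counts are reported as multiclass, so a person still has to confirm whether the task is really classification.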

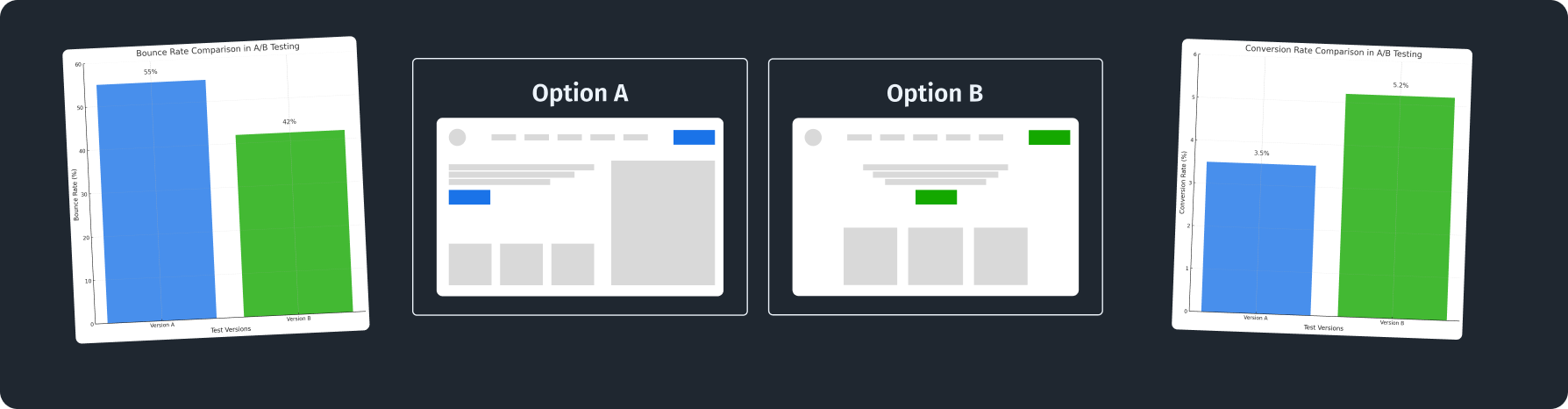

How to Assess and Benchmark Algorithm Effectiveness

- Cross-Validation:

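Use methods like k-fold cross-validation. This divides your dataset into k parts; the model is trained on k-1 parts and tested on the remaining one, rotating until every part has served once as the test set. Averaging the k scores gives a more reliable estimate of performance on unseen data and helps detect overfitting.

- Performance Metrics: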

Model evaluation metrics show how well the chosen model performs, and the right ones depend on the problem type:

- Classification:

Look at accuracy, precision, recall, and F1 score.

- Regression:

Use RMSE (Root Mean Squared Error) and R-squared. Choosing metrics that fit the problem leads to more accurate and trustworthy assessments.
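The sketch below runs 5-fold cross-validation with scikit-learn's `cross_validate` and reports the metrics named above. Synthetic data from `make_classification` and `make_regression` stands in for a real dataset, and the models and fold settings are arbitrary choices.

```python
from sklearn.datasets import make_classification, make_regression
from sklearn.ensemble import GradientBoostingRegressor, RandomForestClassifier
from sklearn.model_selection import KFold, StratifiedKFold, cross_validate

# Synthetic stand-ins for real project data.
X_clf, y_clf = make_classification(n_samples=300, n_features=8, random_state=0)
X_reg, y_reg = make_regression(n_samples=300, n_features=8, noise=10.0, random_state=0)

# 5-fold CV: each fold is the test set once while the other 4 train the model.
clf_scores = cross_validate(
    RandomForestClassifier(random_state=0), X_clf, y_clf,
    cv=StratifiedKFold(n_splits=5, shuffle=True, random_state=0),
    scoring=["accuracy", "precision", "recall", "f1"],
)
reg_scores = cross_validate(
    GradientBoostingRegressor(random_state=0), X_reg, y_reg,
    cv=KFold(n_splits=5, shuffle=True, random_state=0),
    scoring=["neg_root_mean_squared_error", "r2"],
)

for name in ["accuracy", "precision", "recall", "f1"]:
    s = clf_scores[f"test_{name}"]
    print(f"{name}: {s.mean():.3f} ± {s.std():.3f}")

# scikit-learn negates error metrics so that "higher is better" holds for every score.
rmse = -reg_scores["test_neg_root_mean_squared_error"]
print(f"RMSE: {rmse.mean():.2f}, R²: {reg_scores['test_r2'].mean():.3f}")
```

Reporting the spread across folds, not just the mean, shows how stable the model is.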

Communicating Data Insights to Stakeholders

After getting insights, it’s important to share them effectively with stakeholders who have different knowledge levels.

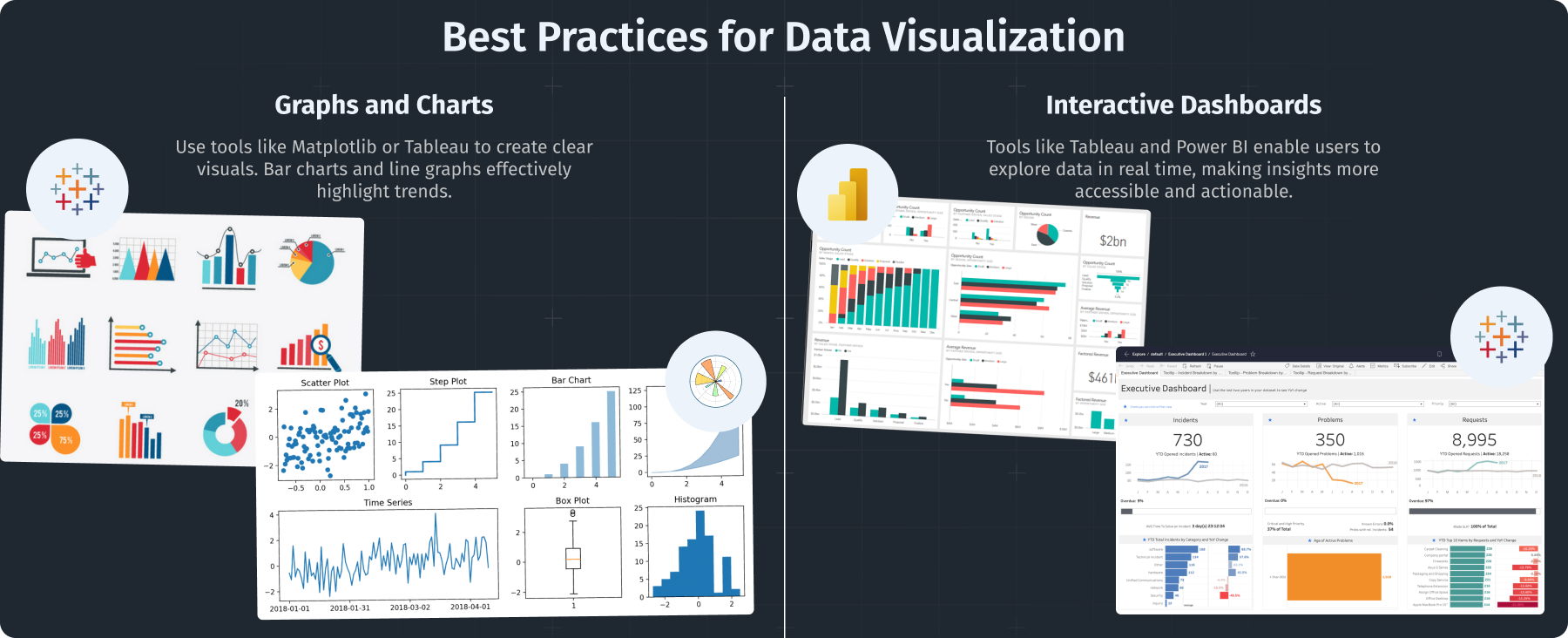

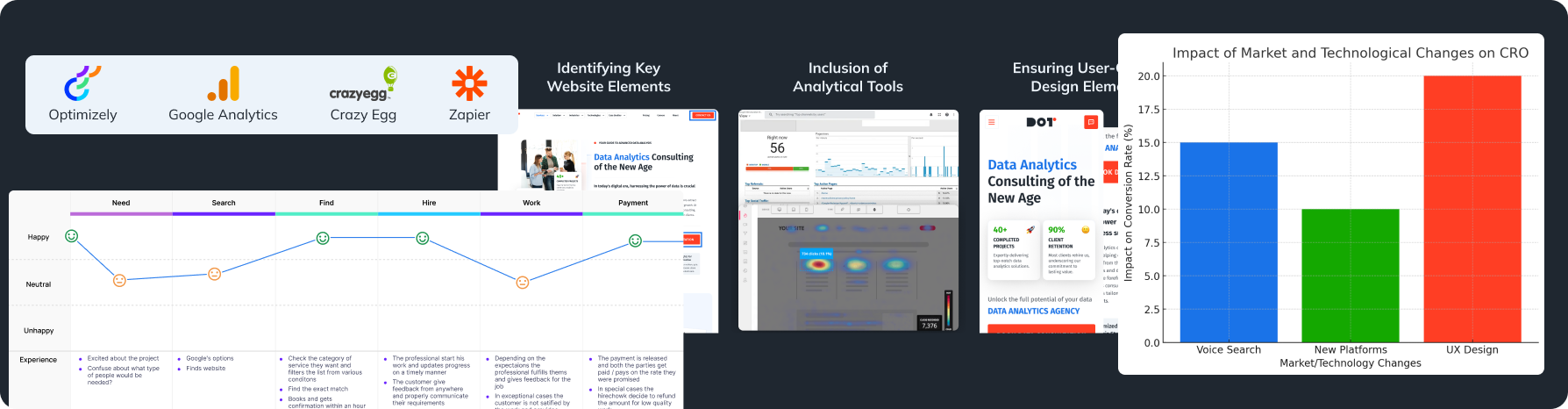

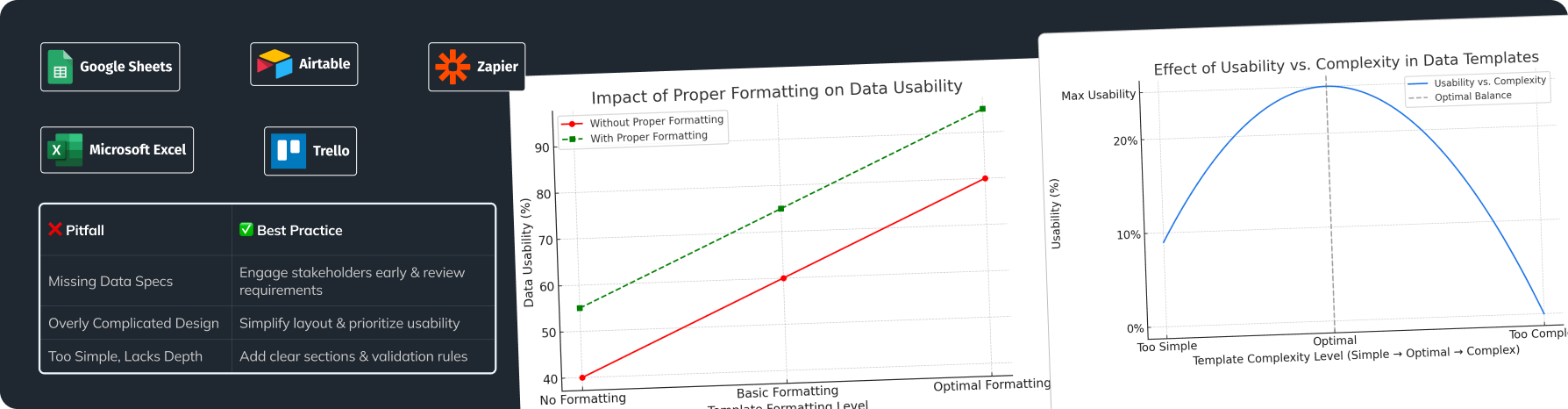

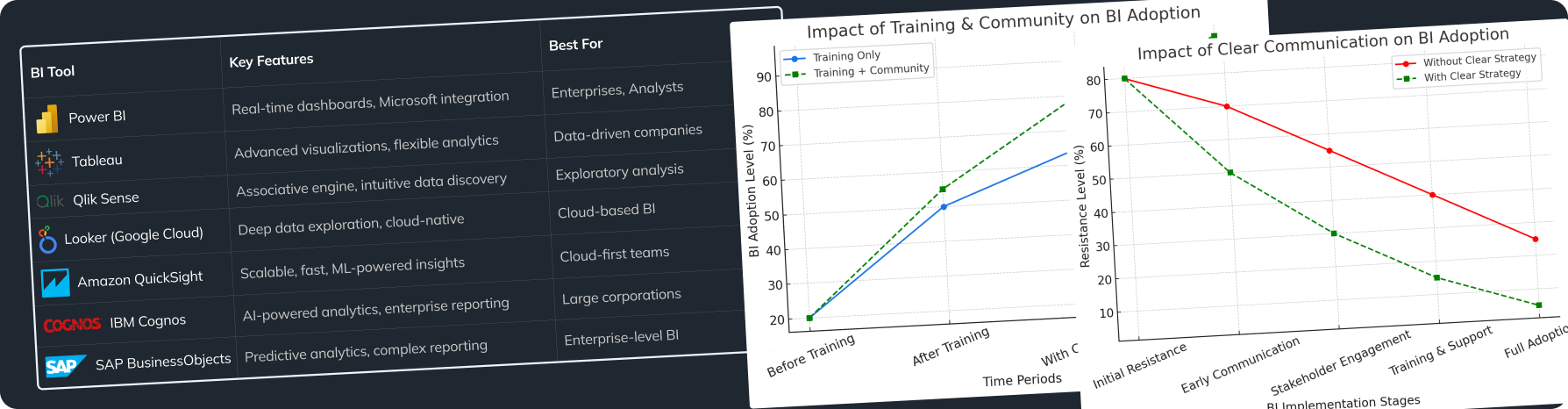

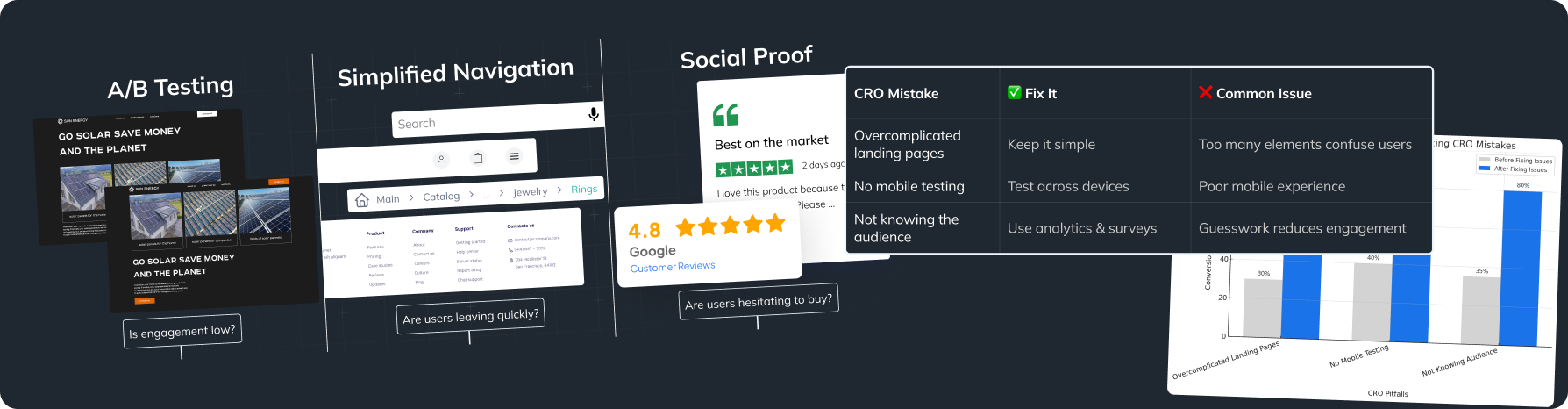

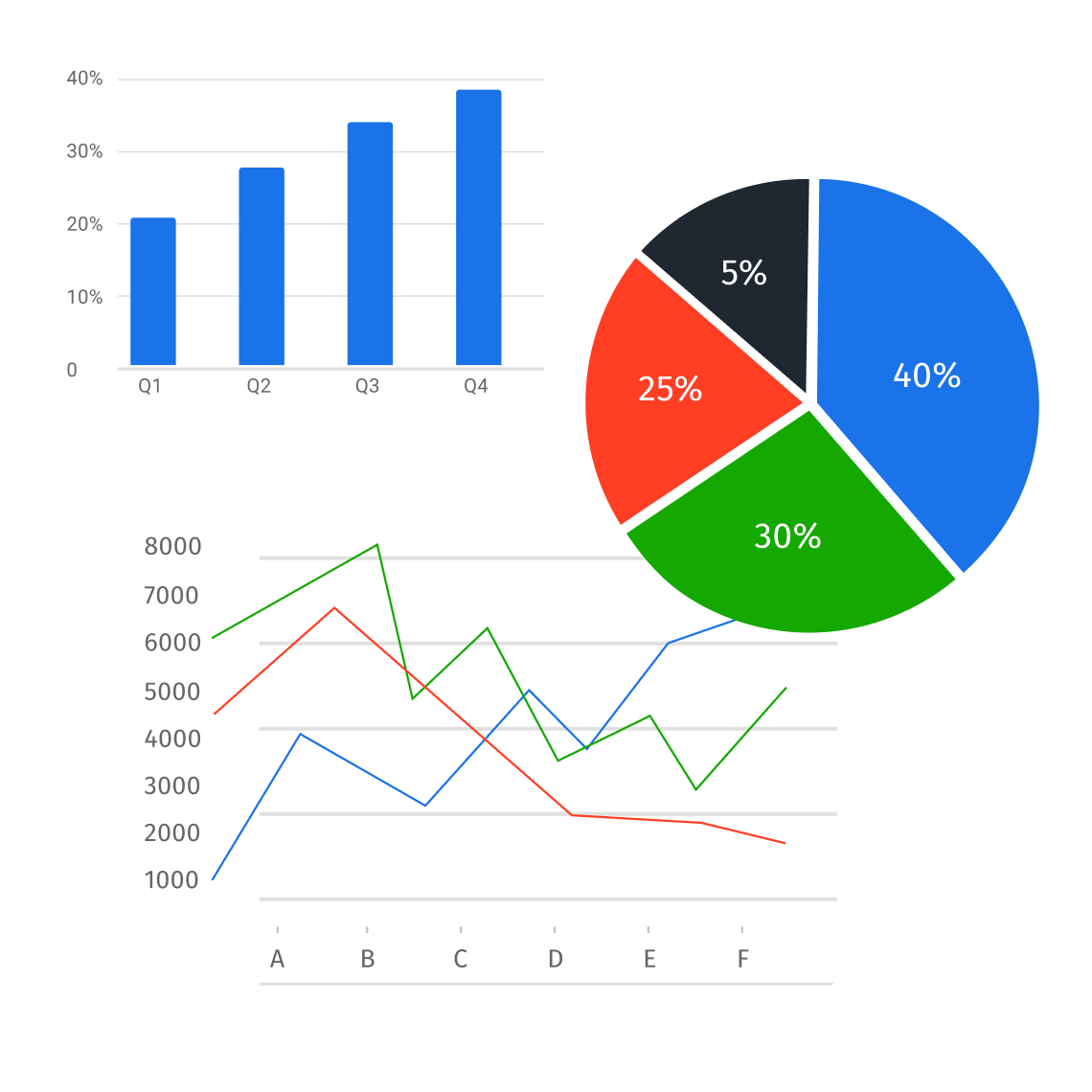

Best Approaches for Data Visualization

- Use Graphs and Charts: Use tools like Matplotlib or Tableau to create clear visuals. Bar charts and line graphs are great for showing trends.

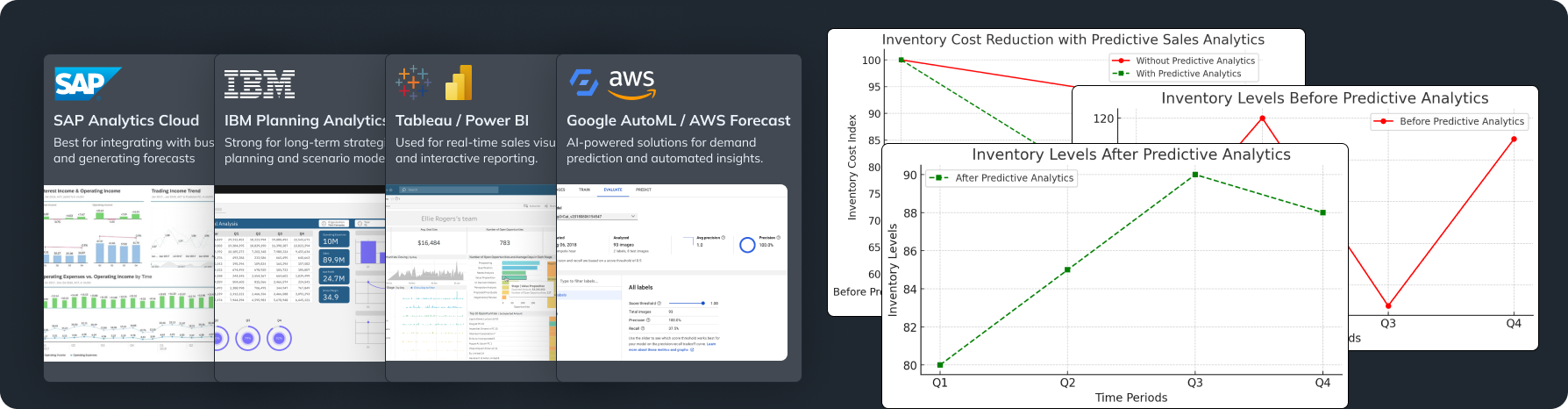

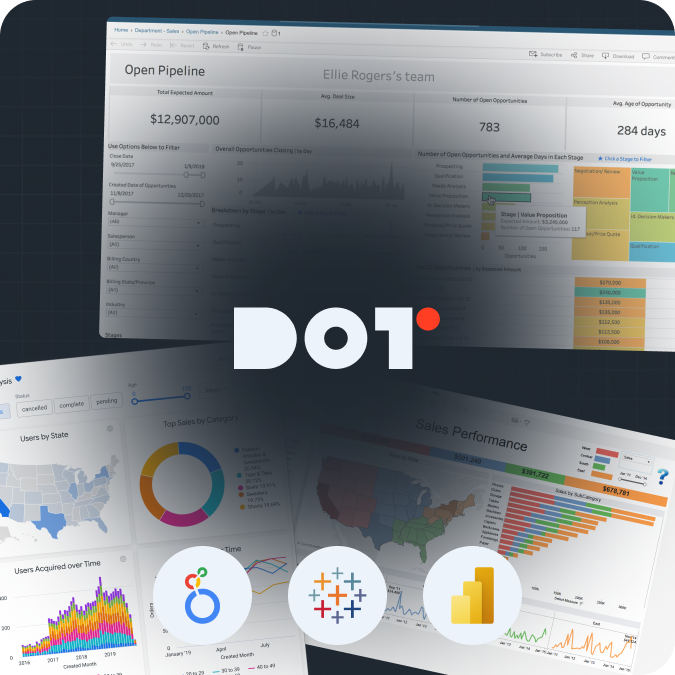

- Dashboards: Create interactive dashboards that let users explore data in real time. Tools like Tableau and Power BI make insights easier to understand and put data visualization best practices into action.
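As a starting point for the charts described above, this Matplotlib sketch draws a line chart for a trend and a bar chart for a comparison, then saves both to one image file. All numbers and the output filename are hypothetical.

```python
import matplotlib

matplotlib.use("Agg")  # render to a file without needing a display
import matplotlib.pyplot as plt

# Hypothetical monthly figures.
months = ["Jan", "Feb", "Mar", "Apr", "May", "Jun"]
churn_pct = [8.1, 7.9, 7.4, 6.8, 6.5, 6.1]
new_customers = [120, 135, 128, 150, 162, 170]

fig, (ax_line, ax_bar) = plt.subplots(1, 2, figsize=(10, 4))

# Line chart: suited to showing a trend over time.
ax_line.plot(months, churn_pct, marker="o")
ax_line.set_title("Monthly churn rate")
ax_line.set_ylabel("Churn (%)")

# Bar chart: suited to comparing quantities across categories or periods.
ax_bar.bar(months, new_customers)
ax_bar.set_title("New customers per month")
ax_bar.set_ylabel("Customers")

fig.tight_layout()
fig.savefig("kpi_overview.png", dpi=150)
plt.close(fig)
```

Clear titles and labeled axes with units do much of the work of making a chart understandable to non-experts.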

Customizing Messages for Various Audience Types

- Technical Audience:

Give technical groups detailed analyses, including the statistics and methodology used. This builds credibility.

- Non-Technical Audience:

Simplify insights for non-experts, focusing on what actions to take based on the data, and avoiding technical language. Tailoring your message in line with data science best practices can enhance understanding among different audiences.

Ensuring Reproducibility in Data Science Best Practices

Reproducibility is key in data science, ensuring findings can be checked and repeated.

The Value of Version Control and Comprehensive Documentation

- Use Version Control Systems: Use tools like Git to keep track of your code changes. This allows team members to work together easily and revert to previous versions of your work if needed.

- Document Everything: Good documentation is important. Create a README file that includes data sources, algorithm details, and methods used. This helps future data scientists understand your project, ensuring adherence to data science best practices.

Tools and Practices that Enhance Reproducibility

- Use Docker to create a consistent working environment. This ensures all software and packages are the same across different computers.

- Use virtual environments such as conda or Python's venv to manage dependencies, and list every required package with its version so others can replicate your work. Sound machine learning model deployment practices also make it easier to share and apply your models.
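Docker and virtual environments pin the software, but a run should also record its random seed and package versions. This sketch uses the standard library plus NumPy; the seed value, package list, helper names, and `run_metadata.json` filename are assumptions. Commands such as `pip freeze > requirements.txt` or `conda env export` capture the full environment.

```python
import json
import platform
import random
from importlib import metadata

import numpy as np

SEED = 42  # arbitrary fixed value; what matters is using and recording it


def set_seeds(seed: int) -> np.random.Generator:
    """Seed Python's and NumPy's global generators and return a dedicated Generator."""
    random.seed(seed)
    np.random.seed(seed)
    return np.random.default_rng(seed)


def environment_snapshot(packages: list[str]) -> dict:
    """Record the Python version, the seed, and installed versions of given packages."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None  # not installed in this environment
    return {"python": platform.python_version(), "seed": SEED, "packages": versions}


rng = set_seeds(SEED)
sample = rng.normal(size=3)  # same three numbers on every run with the same seed

snapshot = environment_snapshot(["numpy", "pandas", "scikit-learn"])
with open("run_metadata.json", "w") as f:
    json.dump(snapshot, f, indent=2)
```

Committing the metadata file alongside the code in Git lets a colleague rerun the analysis under the same conditions.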

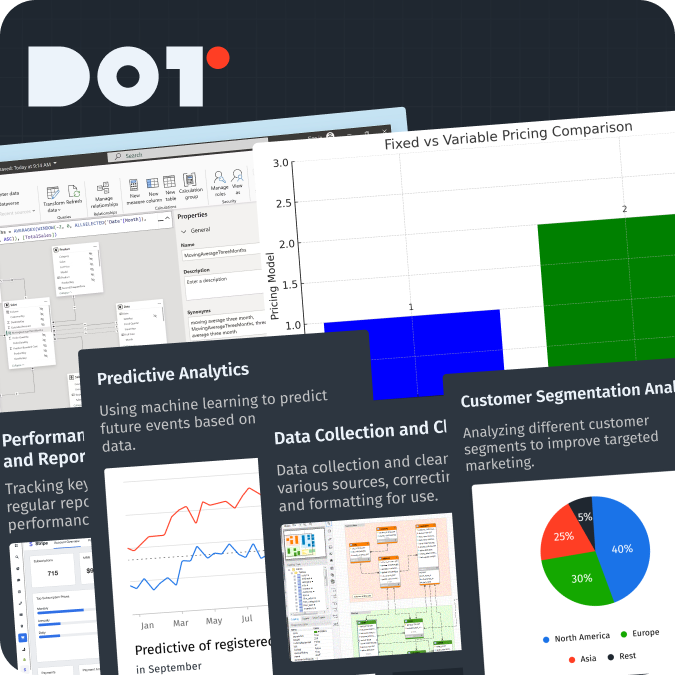

Recommended Tools and Technologies

Using the right tools can help your data science projects be more productive and successful. Incorporating data science best practices when selecting tools can maximize their effectiveness.

Summary of Common Data Science Tools

- Python: A popular programming language for data science, and one of the best first choices when learning the field. Libraries like Pandas and Scikit-learn help with data manipulation and analysis.

- R: Great for statistical analysis and visualizations. The ggplot2 library is well-known for creating detailed charts.

- SQL: Essential for managing relational databases. Knowing SQL makes it easier to query and update data efficiently.
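Python and SQL are often used together: the database does the aggregation, and pandas handles the analysis. This sketch uses an in-memory SQLite database from Python's standard library; the `orders` table and its rows are hypothetical. The `CHECK` constraint shows a validation rule enforced by the database itself.

```python
import sqlite3

import pandas as pd

# In-memory SQLite database with a hypothetical orders table.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE orders (
        order_id INTEGER PRIMARY KEY,
        customer TEXT NOT NULL,
        amount REAL NOT NULL CHECK (amount >= 0)
    );
    INSERT INTO orders (customer, amount) VALUES
        ('Ann', 20.0), ('Bob', 35.5), ('Ann', 14.5), ('Cy', 12.0);
""")

# Aggregate in SQL, then load the small result into a DataFrame.
query = """
    SELECT customer, COUNT(*) AS n_orders, SUM(amount) AS total
    FROM orders
    GROUP BY customer
    ORDER BY total DESC
"""
summary = pd.read_sql_query(query, conn)
print(summary)
conn.close()
```

Pushing filtering and aggregation into the database means only the summarized rows travel to Python, which matters as tables grow.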

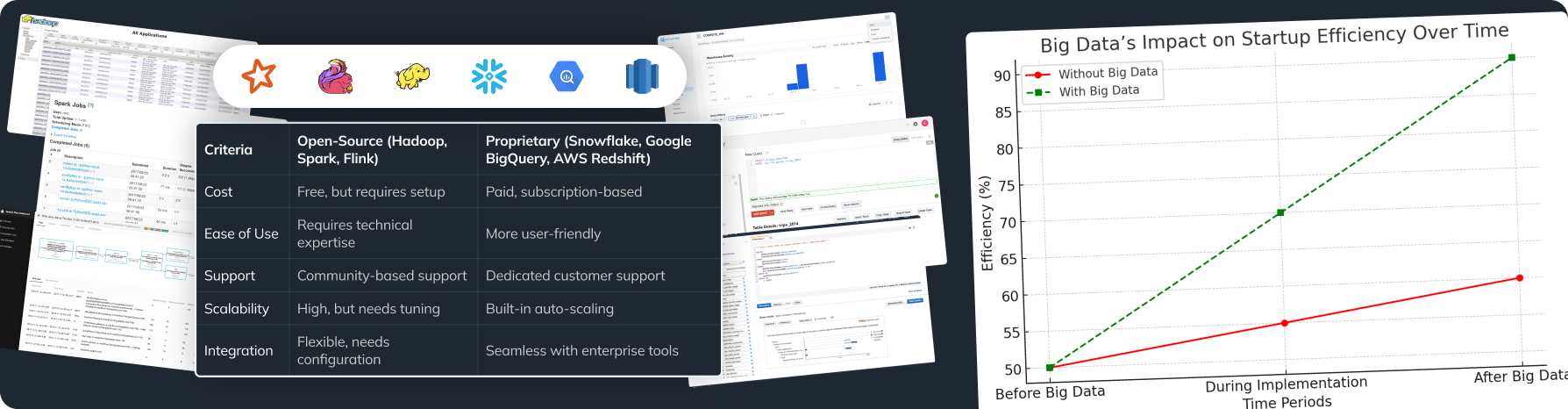

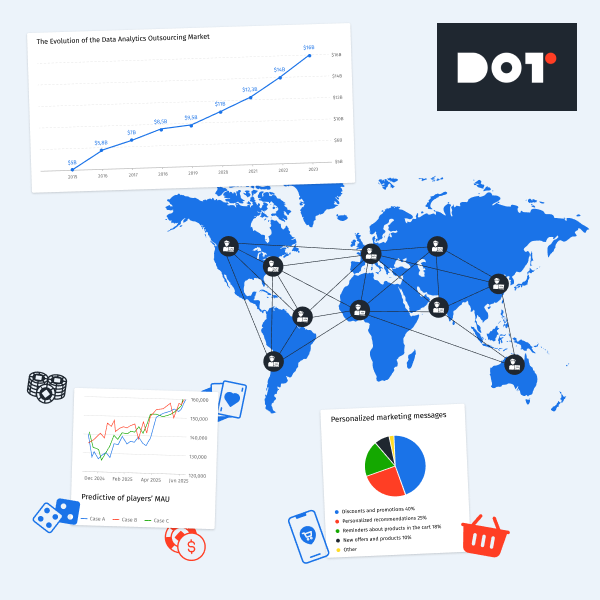

Newest Technologies Impacting Data Science

New technologies are changing the field of data science.

- AutoML:

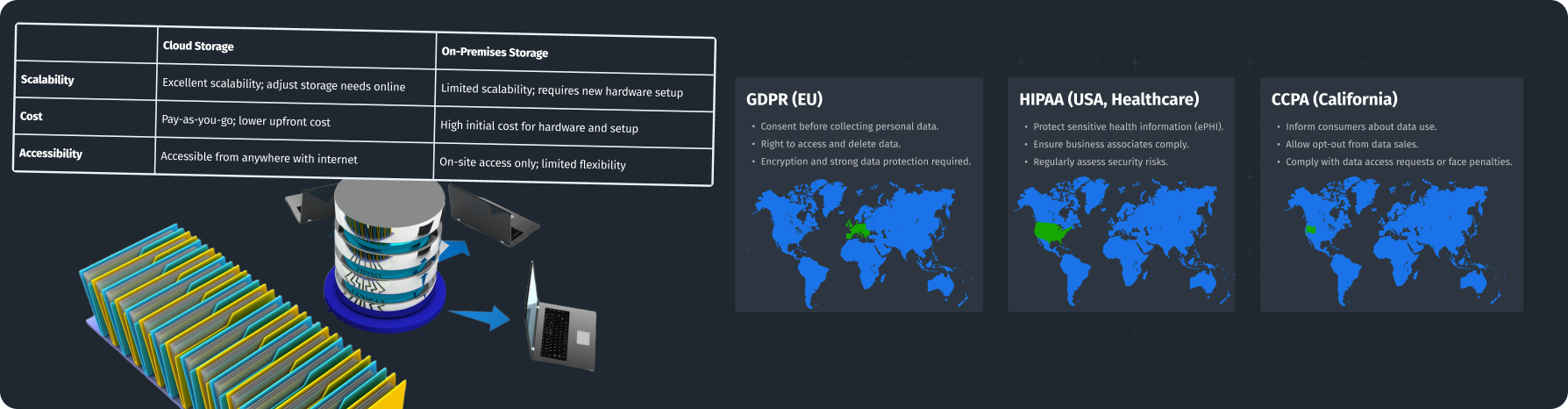

Tools that automate machine learning make it easier to select and tune models, saving time on preprocessing and algorithm selection.

- Cloud Computing:

Platforms like AWS and Google Cloud provide scalable storage and computing. They make it easier to handle large datasets without investing in expensive hardware.
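Full AutoML tools also search across model families and preprocessing steps, but the core idea of automated tuning can be sketched with scikit-learn's `GridSearchCV`, which tries every combination in a parameter grid using cross-validation. This is a simpler, swapped-in technique rather than an AutoML tool; the grid and the synthetic data are illustrative.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV

# Synthetic stand-in for a real dataset.
X, y = make_classification(n_samples=300, n_features=8, random_state=0)

# Every combination below (2 x 2 = 4 candidates) is scored with 5-fold CV.
param_grid = {
    "n_estimators": [50, 100],
    "max_depth": [3, None],
}
search = GridSearchCV(
    RandomForestClassifier(random_state=0),
    param_grid,
    cv=5,
    scoring="f1",
)
search.fit(X, y)

print("Best parameters:", search.best_params_)
print("Best mean F1:", round(search.best_score_, 3))
```

Grid search grows quickly with the number of parameters, which is one reason AutoML tools use smarter search strategies.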

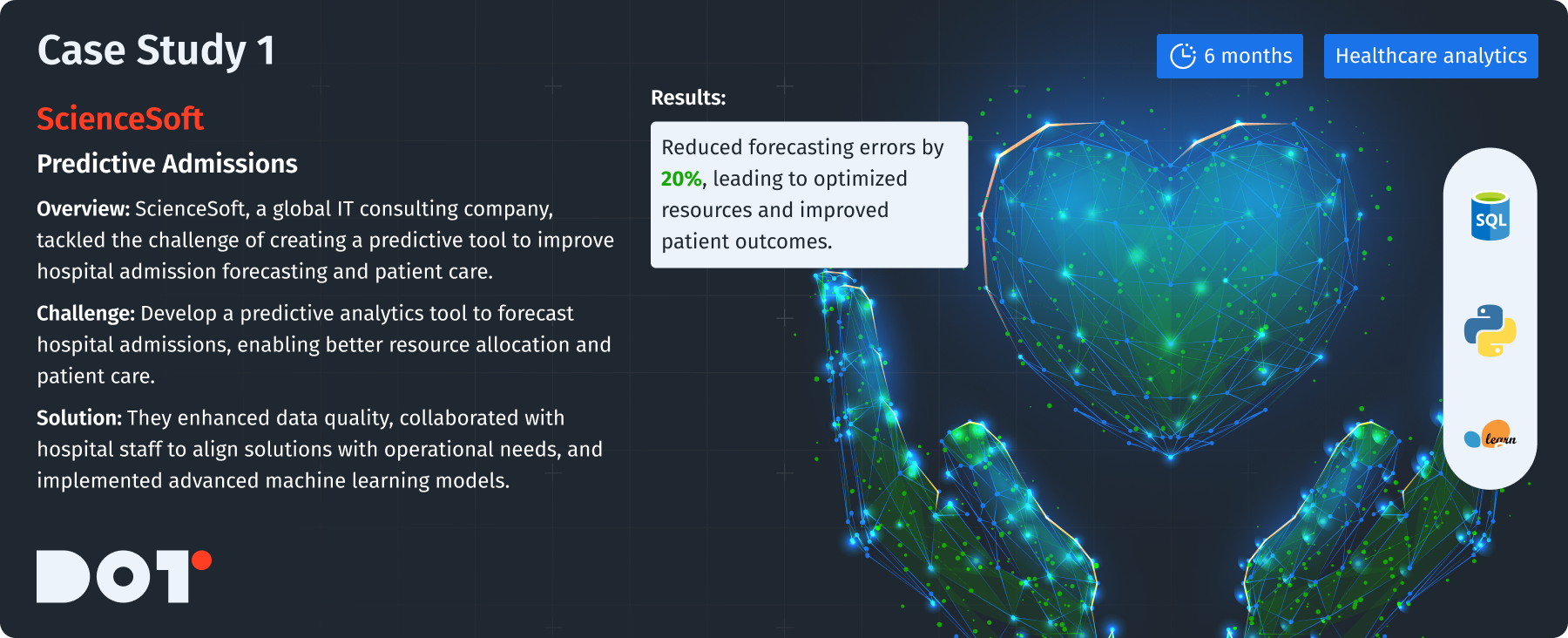

Case Study: ScienceSoft

Introduction to ScienceSoft

ScienceSoft is an IT consulting and software development company. It applies best practices to solutions in many fields, especially healthcare.

Challenge

They needed to create a predictive tool to improve patient care by forecasting hospital admissions accurately.

Solution Description

They recognized that following data science best practices was necessary to improve both data quality and modeling.

What They Did

- Worked with hospital staff to understand their needs, keeping the data solution aligned with operations.

- Set clear success metrics related to patient outcomes.

How They Did It

- Conducted data profiling to check data quality and used historical records for analysis.

- Applied machine learning algorithms, tuning them through strict validation for the best results.

Team Composition

The team included Data Scientists, Healthcare Consultants, and Software Engineers. They worked together to solve problems.

Project Duration

The project took six months from start to finish.

Technologies Used

- Python for data analysis.

- Scikit-learn for using various machine learning algorithms.

- SQL for database management.

Results

- Reduced patient admission forecasting errors by 20%.

- Improved resource allocation, leading to better patient care.

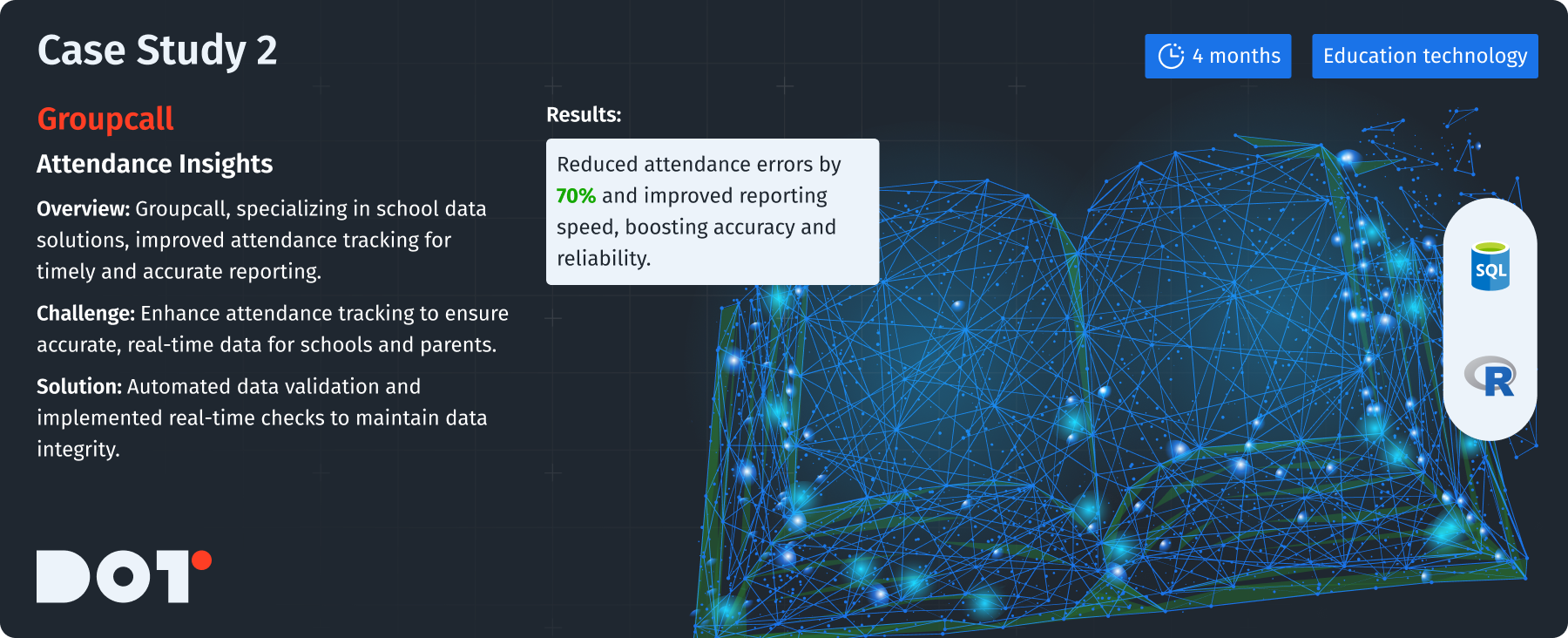

Case Study: Groupcall

Introduction to Groupcall

Groupcall offers innovative communication and data solutions for schools. They focus on improving parental engagement and tracking attendance.

Challenge

They wanted to better track attendance to provide timely and reliable information to schools and parents.

Solution Description

They aimed for strong data integrity by improving data collection processes.

What They Did

- Created automated systems to check attendance data in real-time, improving accuracy.

- Worked with schools to understand their data collection methods.

How They Did It

- Applied strict data validation rules at the point of entry using SQL, stopping inaccuracies from piling up.

- Conducted regular audits of attendance data to keep it reliable.

Team Composition

The Groupcall team had Data Analysts, Software Developers, and Educational Consultants, all contributing their special skills.

Project Duration

The project was completed in four months, showing how effective their process was.

Technologies Used

- SQL for managing databases efficiently.

- R for statistical analysis and visualizing attendance data.

Results

- Enhanced attendance record accuracy by over 30%.

- Strengthened parental engagement due to timely updates and better data visibility.

Checklist for Data Science Best Practices

Before starting your data science project, check off these important steps:

Identify clear project goals and ensure they align with business objectives.

Validate that success metrics are clearly defined and tied to established KPIs.

Conduct thorough checks for data quality, assessing missing data and duplicates.

Select appropriate algorithms and rigorously validate their effectiveness using model evaluation metrics.

Communicate insights effectively to key stakeholders.

Do you fully understand the topic? If not, you can book a free 15-minute consultation with an expert from Dot Analytics.

Summary

This overview shows why data science best practices matter for data-driven projects. By understanding project aims, measuring success well, ensuring data quality, and sharing insights effectively, data professionals can get the full value from their data.

Using the right tools and strategies is crucial for achieving real outcomes. The real-world examples from ScienceSoft and Groupcall show how following best practices can lead to better performance.

If this topic is top of mind for you, book a free 20-minute consultation with a Dot Analytics expert. We are eager to assist you in navigating the landscape of data science and achieving your project goals effectively.
