What is Data Analytics Integration and Why It Matters

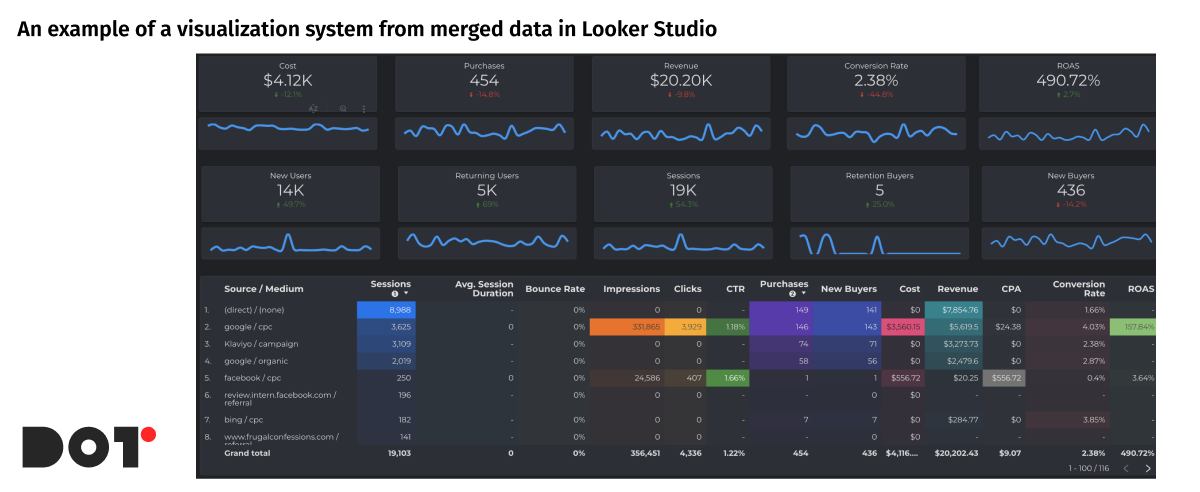

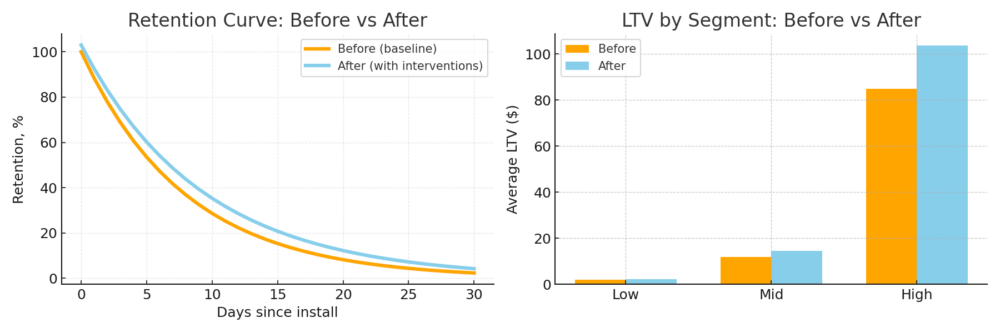

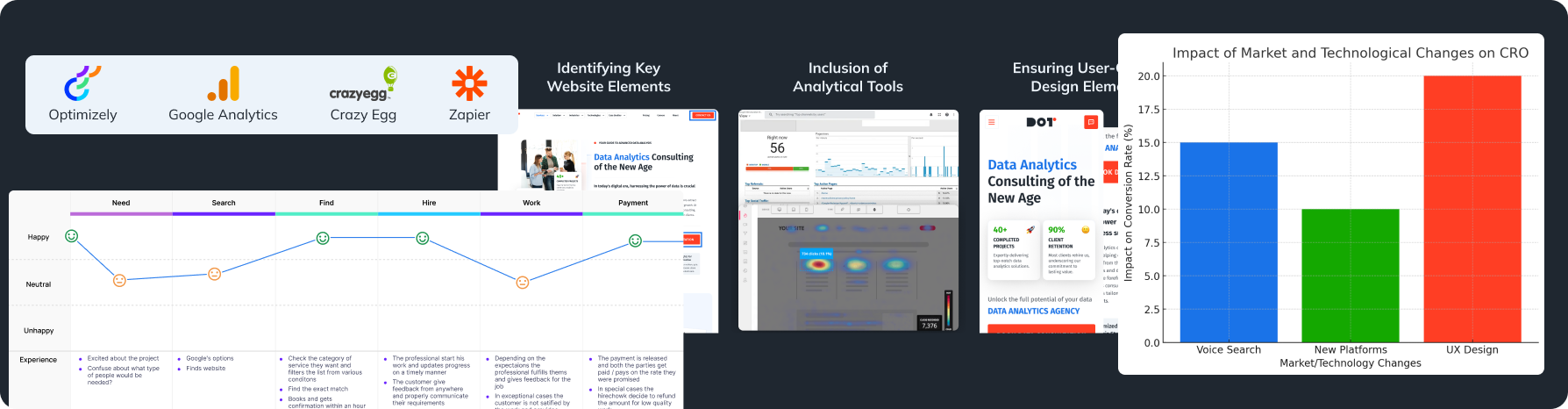

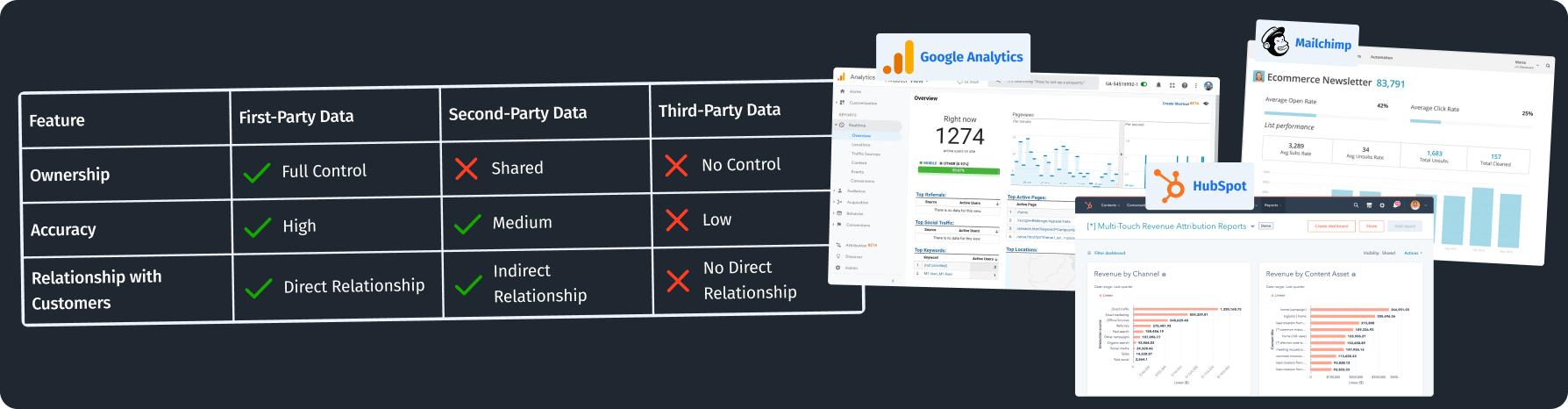

Data integration is the process of combining data from different sources into one place, such as a data warehouse or a visualization system. It involves gathering data from CRM systems, advertising platforms, and other business tools and unifying it into a single, consistent dataset.

This unification lets the business use its data seamlessly wherever it is needed. For instance, marketers no longer have to spend time manually consolidating data from Google Ads, Meta Ads, Google Analytics, CRM, Pinterest Ads, and other platforms. Data integration thus frees employees from manual consolidation, allowing them to focus on analysis and decision-making.

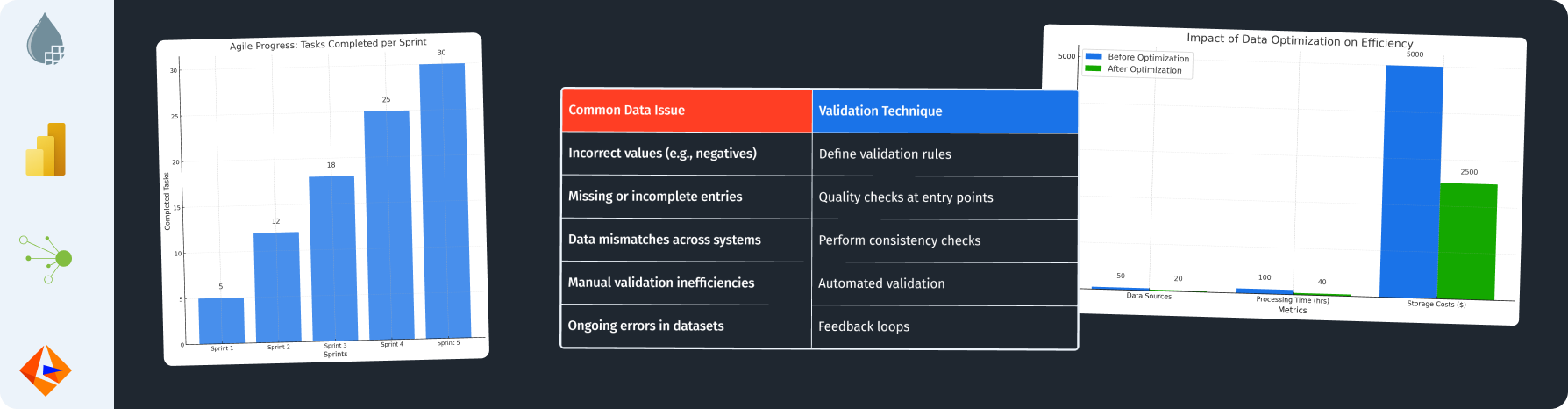

The data integration process usually involves several key steps:

- Data Collection: Gathering data from various sources.

- Data Cleaning: Removing duplicates, correcting errors, and standardizing data formats.

- Data Transformation: Converting data into the structure and formats required by the system it will be loaded into.

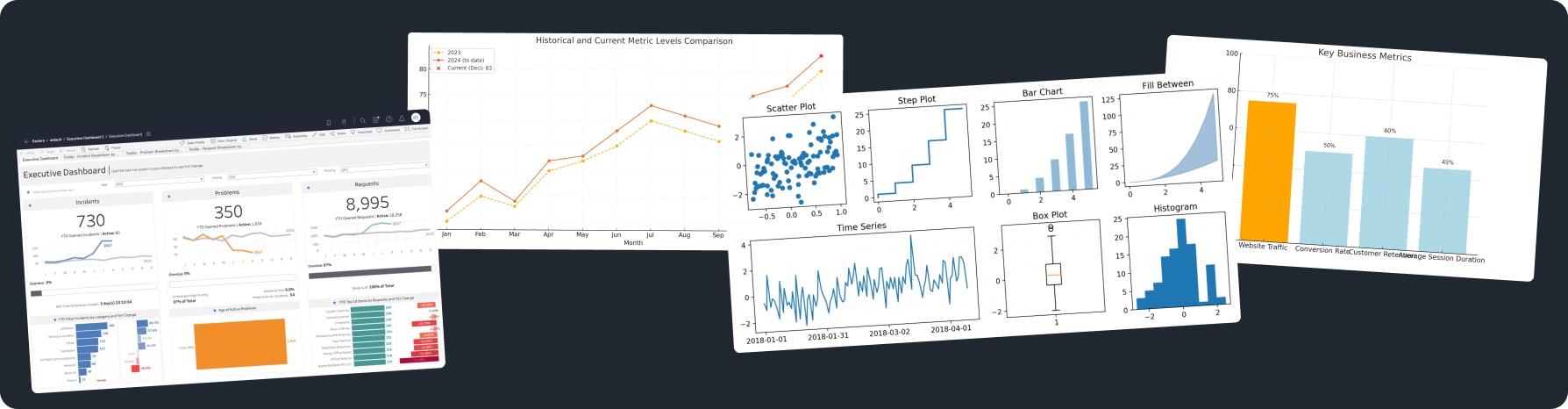

- Data Loading: Loading data into a central repository or visualization system, where it is organized for easy analysis. Modern visualization tools like Tableau, Power BI, or Looker Studio (formerly Google Data Studio) enable interactive reports and dashboards for visualizing and analyzing the integrated data.
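The four steps above can be sketched as a tiny pipeline. This is a minimal illustration, not a production implementation: the sample rows, field names, and the `ad_performance` table are hypothetical, and an in-memory SQLite database stands in for the data warehouse.

```python
import sqlite3
from datetime import datetime


def collect():
    """Step 1: gather raw rows from each source (stubbed with sample data)."""
    google_ads = [
        {"date": "2024-05-01", "campaign": " Spring Sale ", "clicks": "120"},
        {"date": "2024-05-01", "campaign": " Spring Sale ", "clicks": "120"},  # duplicate row
    ]
    meta_ads = [{"day": "01/05/2024", "campaign_name": "Retargeting", "link_clicks": 45}]
    return google_ads, meta_ads


def clean(rows):
    """Step 2: trim stray whitespace, then drop exact duplicates."""
    seen, result = set(), []
    for row in rows:
        tidy = {k: v.strip() if isinstance(v, str) else v for k, v in row.items()}
        key = tuple(sorted(tidy.items()))
        if key not in seen:
            seen.add(key)
            result.append(tidy)
    return result


def transform(google_ads, meta_ads):
    """Step 3: map both sources onto one schema: (source, date, campaign, clicks)."""
    unified = [("google_ads", r["date"], r["campaign"], int(r["clicks"])) for r in google_ads]
    for r in meta_ads:
        # Assumption: this source sends dates as DD/MM/YYYY; convert to ISO 8601.
        iso_date = datetime.strptime(r["day"], "%d/%m/%Y").date().isoformat()
        unified.append(("meta_ads", iso_date, r["campaign_name"], int(r["link_clicks"])))
    return unified


def load(rows, conn):
    """Step 4: write unified rows to a central table (SQLite stands in for the DWH)."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS ad_performance "
        "(source TEXT, date TEXT, campaign TEXT, clicks INTEGER)"
    )
    conn.executemany("INSERT INTO ad_performance VALUES (?, ?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
google_ads, meta_ads = collect()
load(transform(clean(google_ads), clean(meta_ads)), conn)
print(conn.execute("SELECT * FROM ad_performance ORDER BY source").fetchall())
```

Each step is a separate function so that any one of them can be swapped out, for example replacing the stubbed `collect` with real API calls, without touching the others.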

Data integration gives companies a comprehensive view of their business processes, enabling them to analyze the effectiveness of marketing campaigns, track customer behavior, and make informed decisions. It also improves forecast accuracy, helps optimize business processes, and strengthens customer interactions.

Explaining Data Analytics Integration Processes

Integration Techniques

Data integration is a key element for effective business management, especially when it comes to analysis and decision-making. Let’s explore various data integration methods, such as ETL processes, API integration, and real-time data streaming, with specific examples.

First Step: Data Collection from Various Sources

Imagine we need to simplify a marketer’s work with reporting, data, and ad performance to speed up decision-making. Here’s how it can be done using different data integration methods.

Native Connectors

For example, data from Google Ads can be collected with the native Google Ads connector for BigQuery (part of the BigQuery Data Transfer Service). This greatly simplifies maintenance and keeps data flowing automatically with minimal setup. No coding is required, as everything is configured through the interface.

API Integration

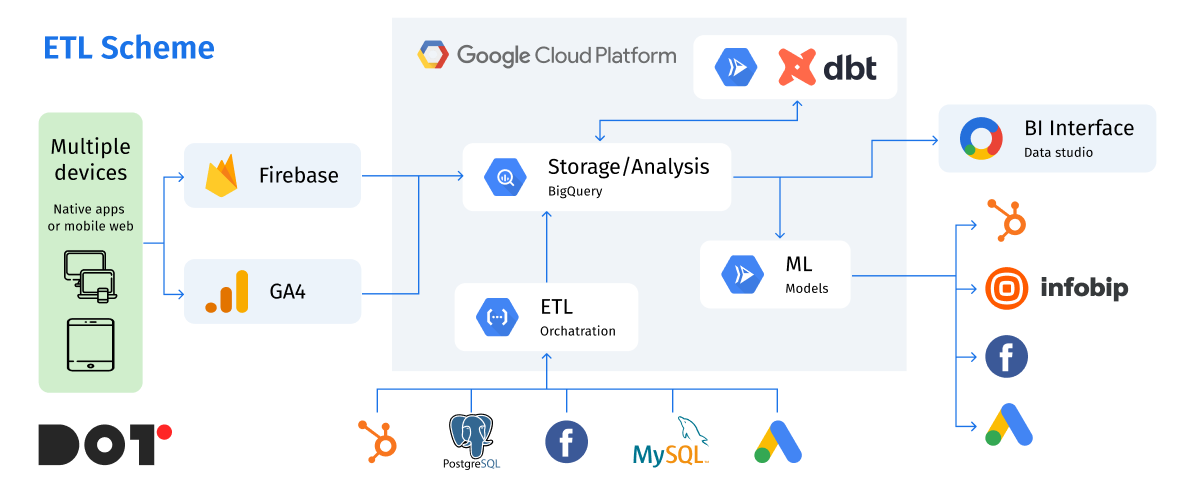

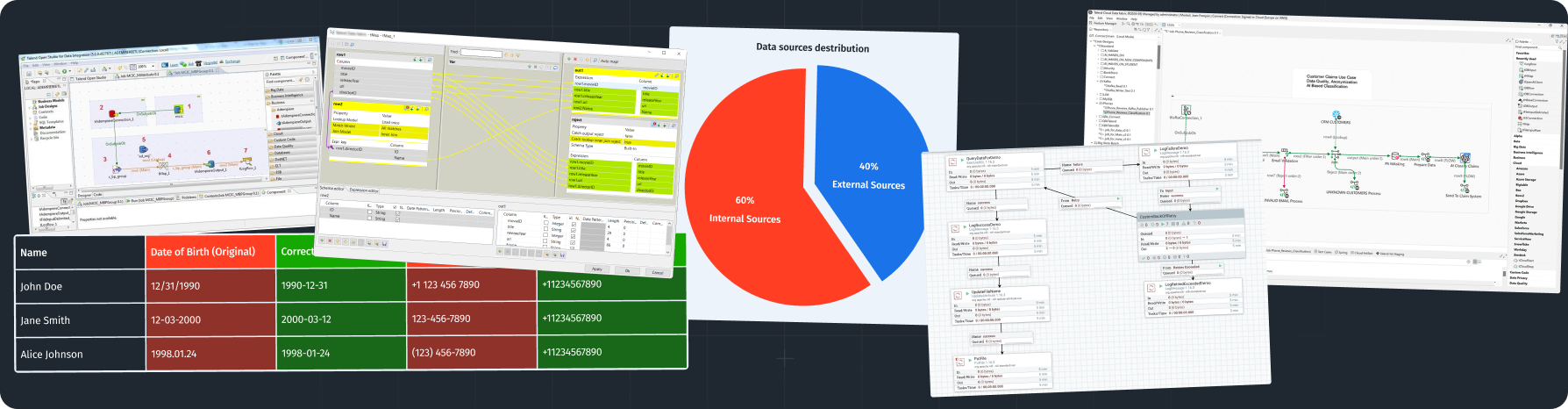

For other services, such as Meta Ads or other advertising tools that do not have direct connectors to your data warehouse (DWH), API integration is used. This method is more time-consuming but allows you to collect exactly the data you need. For instance, you can gather data on clicks, impressions, and conversions, perform basic transformation and cleaning before loading. This is called ETL (Extract, Transform, Load).
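API extraction usually means walking through paginated responses and keeping only the fields you need. The sketch below assumes a simplified, hypothetical response shape (a `data` list plus a `next` cursor) and replaces the real HTTP call with a stub, `fake_fetch`; it does not reproduce the actual Meta Ads API.

```python
from typing import Callable, Iterator, Optional

# Hypothetical pages keyed by cursor; None is the first page.
FAKE_PAGES = {
    None: {"data": [{"campaign": "A", "clicks": 10, "impressions": 900, "spend": 4.2}],
           "next": "cursor-2"},
    "cursor-2": {"data": [{"campaign": "B", "clicks": 7, "impressions": 500, "spend": 3.1}],
                 "next": None},
}


def fake_fetch(cursor: Optional[str]) -> dict:
    """Stand-in for an HTTP request that returns one page of results."""
    return FAKE_PAGES[cursor]


def extract(fetch: Callable[[Optional[str]], dict], fields: list) -> Iterator[dict]:
    """Follow the `next` cursor until it is None, yielding only the requested fields."""
    cursor = None
    while True:
        page = fetch(cursor)
        for record in page["data"]:
            yield {field: record[field] for field in fields}
        cursor = page["next"]
        if cursor is None:
            break


rows = list(extract(fake_fetch, ["campaign", "clicks", "impressions"]))
```

With a real API you would normally send the field list as a request parameter so the server returns only those fields; filtering on the client here just keeps the stub simple.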

ETL Implementation

The ETL process allows data collection using a programming language, such as Python. You can write a script that extracts data from the API, processes it (e.g., transforms date formats, cleans unnecessary characters), and loads it into the data warehouse. This can be useful when specific data transformations or merging information from different sources is needed.
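A minimal version of such a script might look like the following. It assumes, purely for illustration, that the API returns dates as Unix timestamps and campaign names containing emoji or punctuation; the field names and the `meta_ads_daily` table are hypothetical, and SQLite stands in for the warehouse.

```python
import re
import sqlite3
from datetime import datetime, timezone


def transform(record):
    """Normalize one raw API record into a (date, campaign, clicks) row."""
    # Assumption: the API returns the day as a Unix timestamp in seconds (UTC).
    day = datetime.fromtimestamp(record["date_start"], tz=timezone.utc).date().isoformat()
    # Keep letters, digits, whitespace, underscores and hyphens; drop emoji and punctuation.
    campaign = re.sub(r"[^\w\s-]", "", record["campaign_name"]).strip()
    return (day, campaign, int(record["clicks"]))


def load(rows, conn):
    """Insert transformed rows into the warehouse table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS meta_ads_daily (date TEXT, campaign TEXT, clicks INTEGER)"
    )
    conn.executemany("INSERT INTO meta_ads_daily VALUES (?, ?, ?)", rows)
    conn.commit()


# Hypothetical raw records, as they might come back from an ads API.
raw_records = [
    {"date_start": 1714521600, "campaign_name": "Summer Sale 🔥!!", "clicks": "87"},
    {"date_start": 1714608000, "campaign_name": "Brand_Awareness", "clicks": "12"},
]

conn = sqlite3.connect(":memory:")
load([transform(r) for r in raw_records], conn)  # Extract -> Transform -> Load
```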

Real-Time Data Streaming

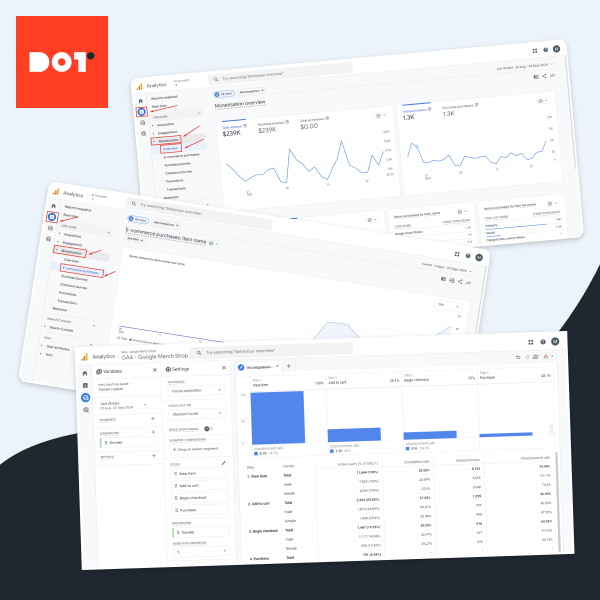

Another type of data collection is real-time streaming. For instance, Google Analytics 4's streaming export to BigQuery writes events to an intraday table that is updated continuously throughout the day, giving the DWH near-real-time data. This method suits applications where up-to-date data is crucial, such as tracking user activity on a website or in a mobile app.
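GA4's streaming export is managed by Google, but if you build your own streaming pipeline, a common pattern is micro-batching: buffer incoming events and write them to the warehouse in small batches rather than one row at a time. The sketch below is a generic illustration with a hypothetical `write_batch` callback; a real implementation would also flush on a timer so that a slow trickle of events is not held back.

```python
from typing import Callable, Iterable, List


def stream_to_warehouse(events: Iterable[dict],
                        write_batch: Callable[[List[dict]], None],
                        batch_size: int = 3) -> None:
    """Buffer incoming events and write them in small batches (micro-batching)."""
    buffer: List[dict] = []
    for event in events:
        buffer.append(event)
        if len(buffer) >= batch_size:
            write_batch(buffer)
            buffer = []  # start a fresh buffer; the written batch is left untouched
    if buffer:
        write_batch(buffer)  # flush the remainder when the stream ends


# Usage: a generator stands in for a live event stream; list.append records each "write".
written_batches = []
events = ({"event_name": "page_view", "seq": i} for i in range(7))
stream_to_warehouse(events, written_batches.append)
```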

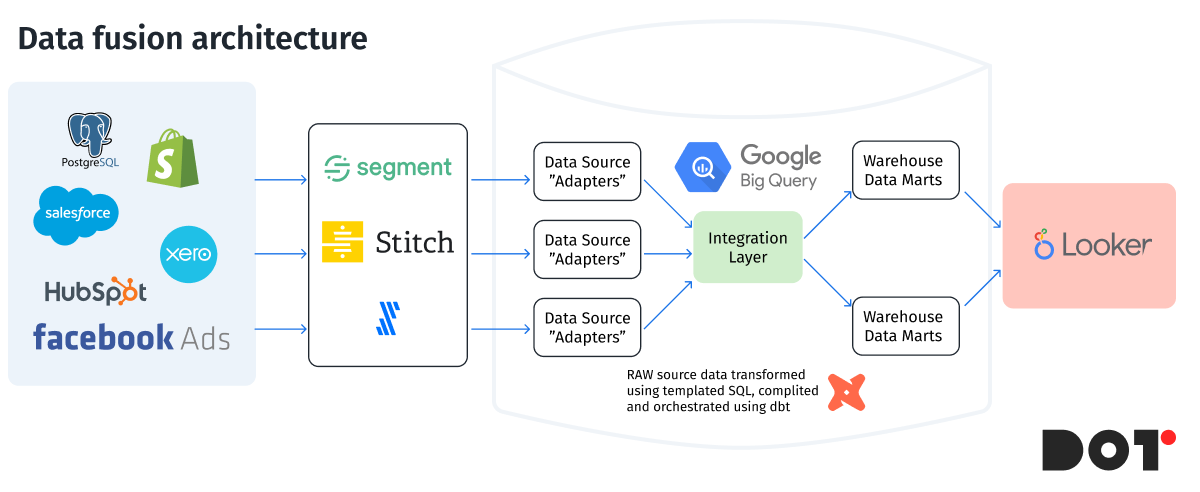

Using ETL Services

There are also ETL services that use their own scripts to collect data and transfer it to your DWH, such as BigQuery or Amazon Redshift. For example, platforms like Fivetran or Stitch can automate the integration process, reducing the need for manual coding and allowing quicker implementation of solutions.

By understanding and utilizing these data integration techniques, businesses can streamline their data management processes, enabling more efficient analysis and decision-making. This not only saves time but also enhances the accuracy and reliability of the insights derived from the data.

Real-Life Examples

Small Business

- Platform: Fivetran

- Situation: A small business wants to integrate data from a website form and CRM. Fivetran automates data transfer without the need for coding.

Medium Business

- Platform: Looker Studio (formerly Google Data Studio)

- Situation: A medium-sized company collects data from Google Ads, Meta Ads, and its website. Using Looker Studio, it creates reports and dashboards for the marketing team.

Large Business

- Platform: Apache NiFi

- Situation: A large corporation integrates data from various departments and external partners, processing large volumes of data in real-time for analytical purposes.

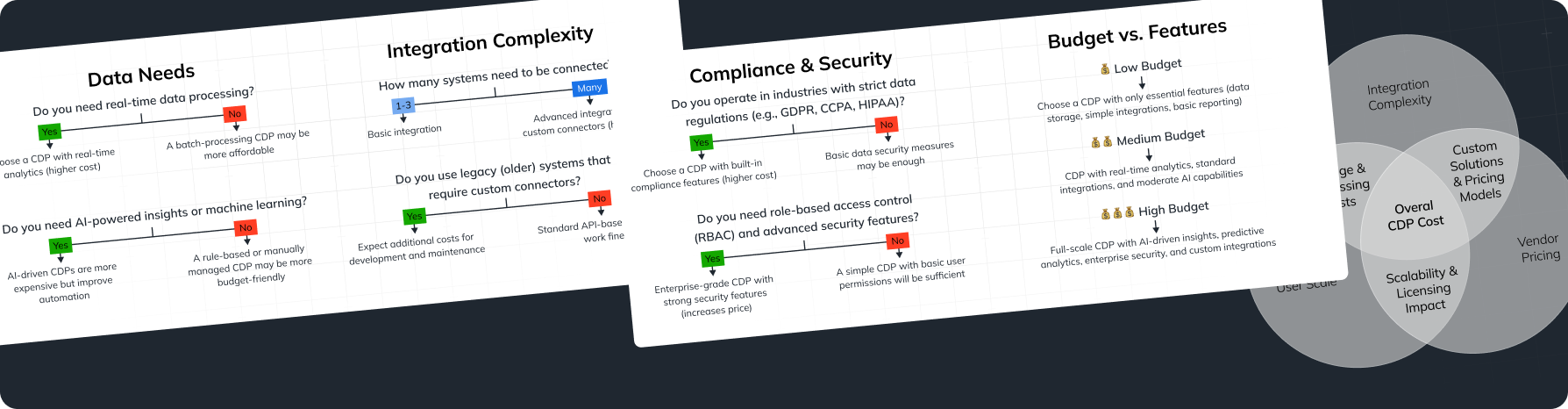

Factors Affecting Data Integration Complexity and Cost

Data integration is a crucial process for many organizations, but its complexity and cost can vary significantly depending on several key factors. Let’s examine the main ones.

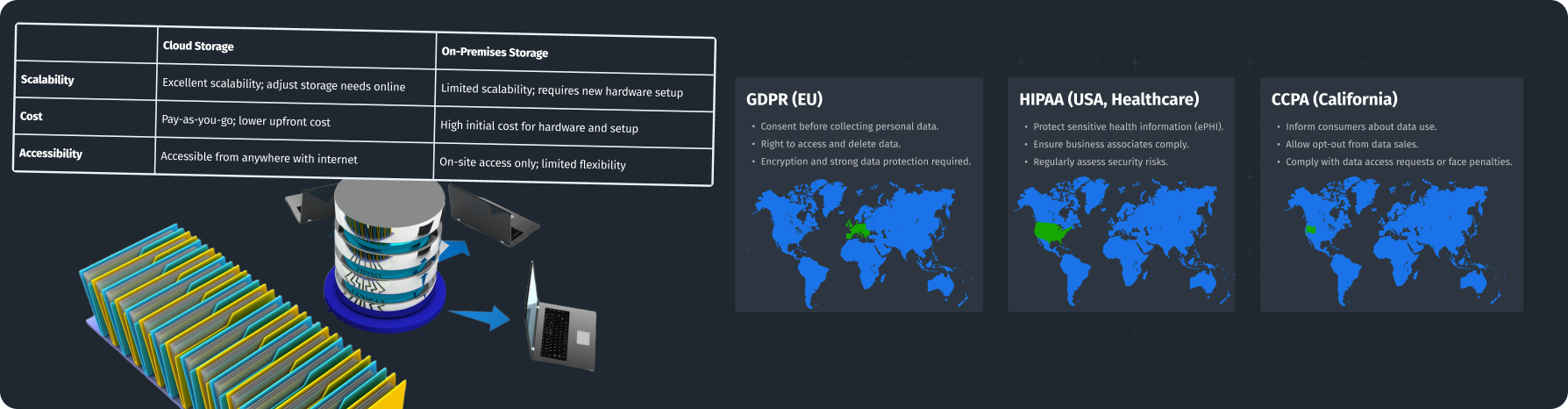

Volume and Variety of Data

The volume and variety of data greatly impact integration strategies and costs. The more data that needs to be processed, the more resources are required for storage, processing, and transfer. For instance, large data volumes require powerful servers and complex systems for processing, increasing the costs of storing and managing such data.

Data variety also adds complexity since data can come in different formats. Integrating various types of data requires different methods and tools for transforming and combining them into a unified system. This necessitates additional expenses for developing and maintaining the necessary tools.
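As an illustration of that extra transformation work, the sketch below maps two hypothetical order exports, one flat CSV and one nested JSON, onto a single common schema. The field names are assumptions made for the example.

```python
import csv
import io
import json

# Hypothetical exports from two systems describing the same kind of entity.
CSV_EXPORT = "order_id,amount,currency\n1001,19.90,EUR\n1002,5.00,EUR\n"
JSON_EXPORT = '[{"id": "A-7", "total": {"value": 42.5, "currency": "USD"}}]'


def from_csv(text):
    """Flat CSV rows: every value arrives as a string."""
    for row in csv.DictReader(io.StringIO(text)):
        yield {"order_id": row["order_id"], "amount": float(row["amount"]),
               "currency": row["currency"]}


def from_json(text):
    """Nested JSON: amount and currency live inside a 'total' object."""
    for item in json.loads(text):
        yield {"order_id": str(item["id"]), "amount": float(item["total"]["value"]),
               "currency": item["total"]["currency"]}


# Both sources now share one schema and can be stored in the same table.
orders = list(from_csv(CSV_EXPORT)) + list(from_json(JSON_EXPORT))
```

Every new format means another adapter like `from_csv` or `from_json` to write and maintain, which is where the additional cost comes from.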

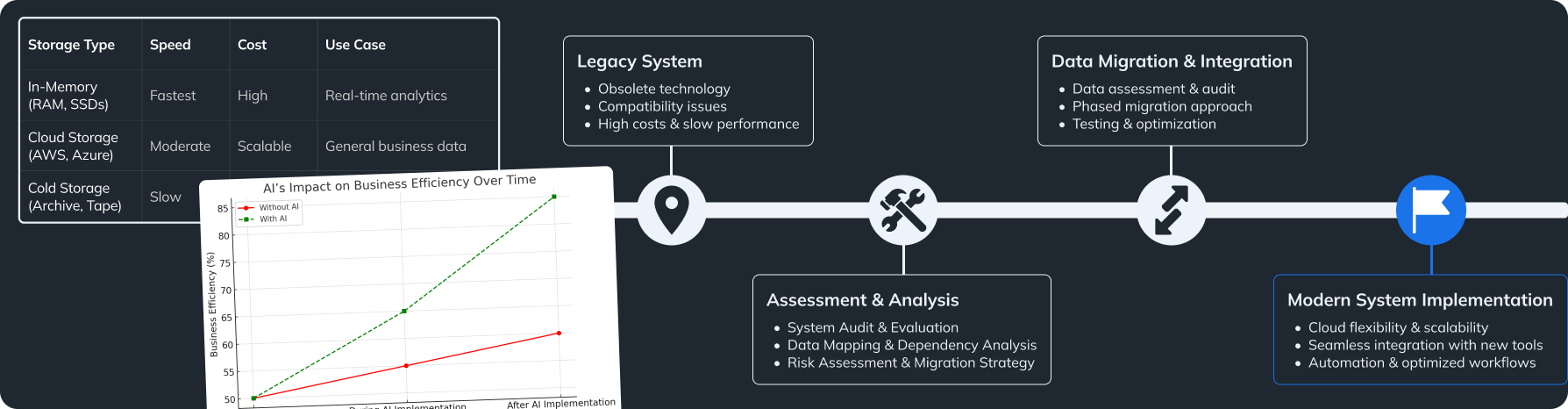

Variability of Data Sources

Integrating data from different sources can be very challenging, especially when dealing with legacy systems. Older systems often lack modern APIs or standard data formats, complicating the process of extracting and processing data. Such systems may require the development of special connectors or the use of intermediary software for integration, raising the overall cost.
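A custom connector for a legacy system can be as simple as a parser for its export files. The sketch below assumes a hypothetical fixed-width text export with a known column layout and dates in `YYYYMMDD` form; real legacy formats vary widely.

```python
# Hypothetical layout of a legacy fixed-width export: (field, start, end) offsets.
LAYOUT = [("customer_id", 0, 6), ("name", 6, 26), ("signup_date", 26, 34)]


def parse_fixed_width(lines):
    """Turn fixed-width lines into dicts, converting YYYYMMDD dates to ISO 8601."""
    for line in lines:
        if not line.strip():
            continue  # skip blank lines
        record = {field: line[start:end].strip() for field, start, end in LAYOUT}
        raw = record["signup_date"]
        record["signup_date"] = f"{raw[:4]}-{raw[4:6]}-{raw[6:8]}"
        yield record


# Sample lines padded to the layout's column widths (6 + 20 + 8 characters).
legacy_export = [
    f"{'000042':<6}{'Jane Smith':<20}{'20230115':<8}",
    f"{'000107':<6}{'ACME Retail GmbH':<20}{'20221201':<8}",
]
customers = list(parse_fixed_width(legacy_export))
```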

On the other hand, modern applications typically have well-documented APIs and support standard data exchange protocols, simplifying the integration process significantly. However, even in these cases, ensuring compatibility and optimizing the data processing workflow from various sources can present challenges.

By understanding these factors, organizations can better plan and manage their data integration projects, balancing complexity and cost effectively.

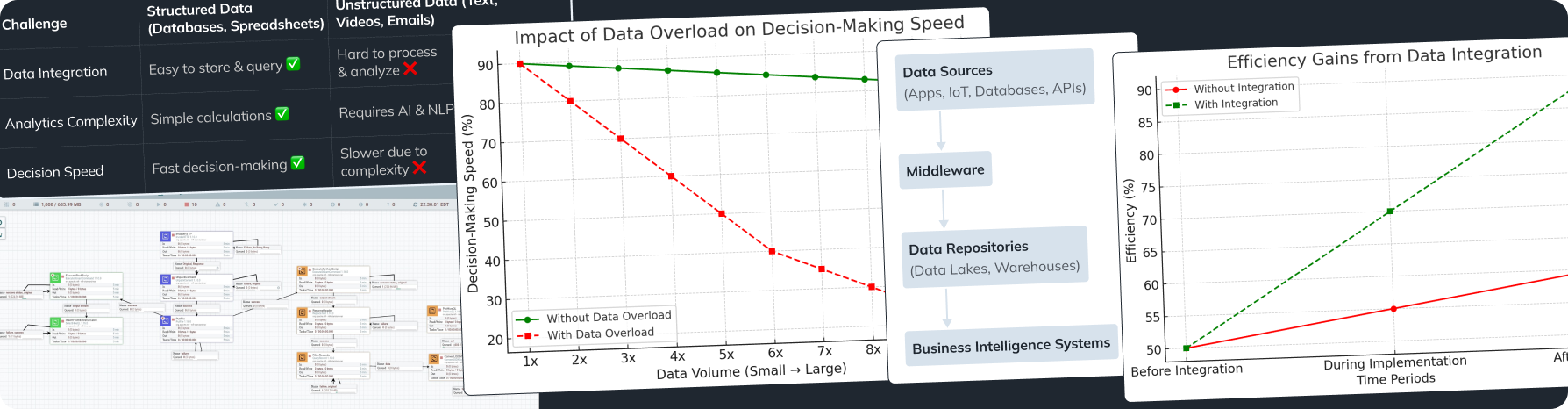

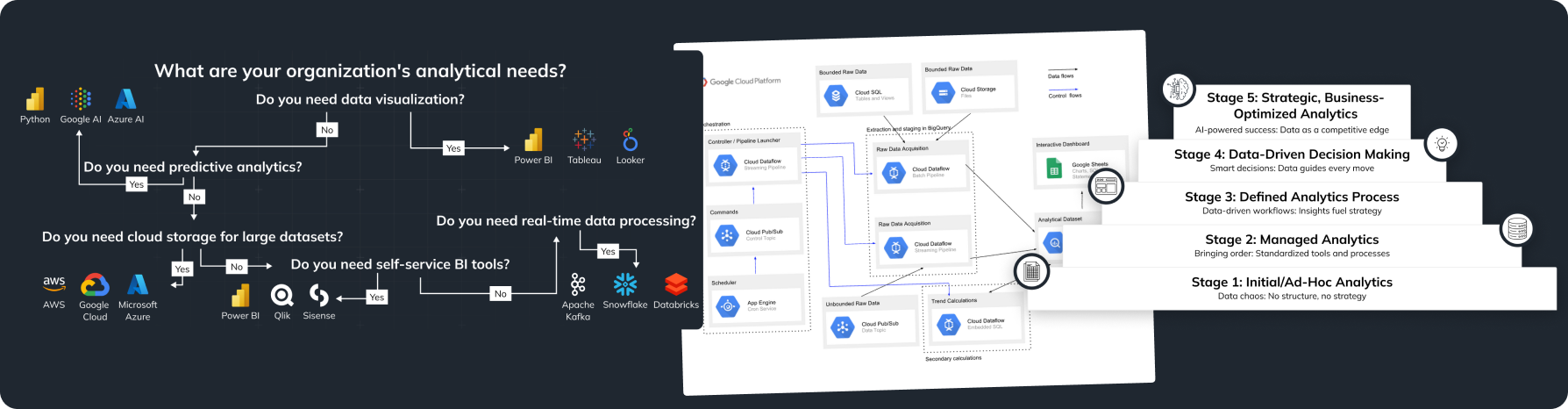

Current Trends and Technologies in Data Integration

Data integration is constantly evolving, incorporating new technologies and approaches that make the process more convenient and efficient. In recent years, the major innovations have been cloud integration platforms and AI-powered tools.

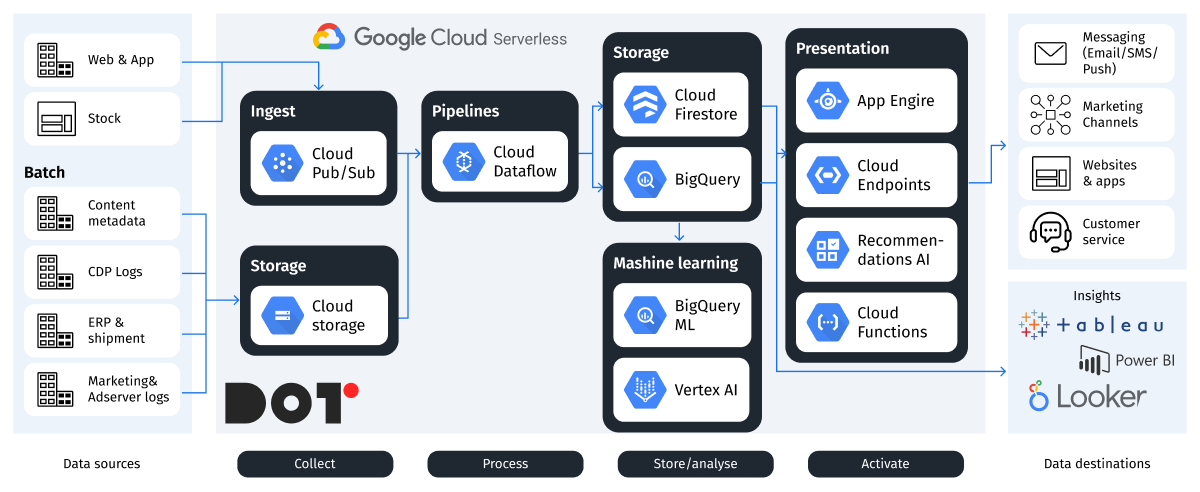

Cloud Integration Platforms

Cloud integration platforms, such as Amazon Web Services (AWS), Google Cloud Platform (GCP), and Microsoft Azure, are gaining popularity due to their flexibility, scalability, and cost-effectiveness. These platforms offer integration services that allow connecting various data sources, automating ETL processes, and ensuring seamless real-time data flow. For example, AWS Glue and Google Cloud Dataflow facilitate data integration by automating many steps of the process.

AI-Powered Tools

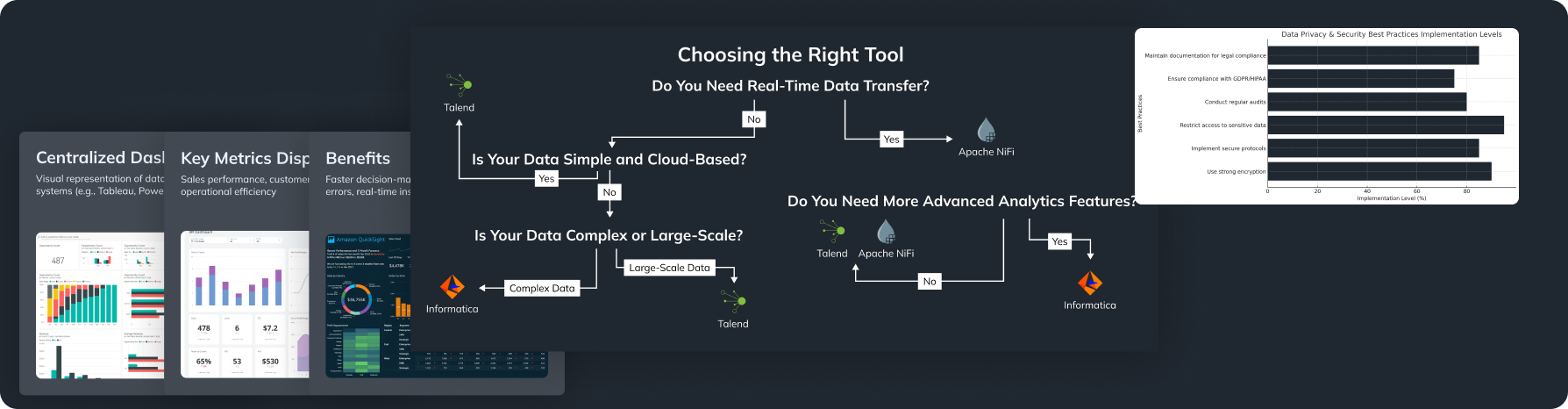

Artificial intelligence is starting to play a significant role in data integration. AI-powered tools can automatically detect, correct, and transform data, significantly reducing the time and effort required for integration. Platforms like Informatica and Talend incorporate AI to improve data quality, automatically detect anomalies, and optimize data transformation processes. They can also predict data needs and automate solutions, enhancing work efficiency.

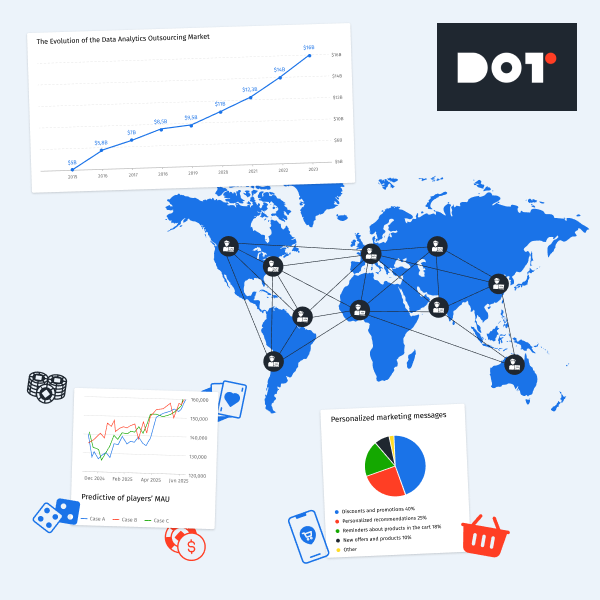

Market Analysis

Trends in the adoption of data integration technologies indicate a rapid growth in the popularity of cloud platforms and AI solutions. More companies are switching to cloud data services due to their flexibility and scalability. According to research, the cloud integration platform market is expected to grow significantly in the next few years, with a projected annual growth rate of over 20%.

AI-powered tools are also showing rapid growth as companies increasingly recognize their value in improving data quality and automating complex processes. By 2025, more than 60% of large companies are expected to use AI for data integration and analytics.

Future Predictions

In the future, data integration will be even more closely linked with innovations in cloud computing and AI. The demand for solutions providing real-time data integration, such as streaming platforms (e.g., Apache Kafka) and tools for working with Big Data, is expected to grow.

Additionally, further developments in self-learning data integration systems are anticipated. This means that integration platforms will become more autonomous, capable of adapting to changes in data sources and business requirements without manual intervention.

By keeping up with these trends and technologies, businesses can stay ahead in data management, ensuring efficient and effective integration processes that support their analytical and decision-making needs. Utilizing APIs in data integration will further streamline the process, enhancing overall efficiency.

Strategies for Efficient Data Integration

Cost-Effective Integration Practices

For modern companies in marketing, e-commerce, and SaaS, integrating data from sources like Google Ads, Meta Ads, CRM systems, and others into a single place is crucial. Using tools like dbt (data build tool) together with Google BigQuery or another data warehouse (DWH) can reduce costs and improve data processing accuracy.

Using dbt and Google BigQuery

dbt and Google BigQuery are powerful tools for processing and storing large volumes of data. dbt allows transforming incoming data from external sources into an analysis-friendly format. Using BigQuery for data streaming ensures fast and scalable data storage.

Data Quality Verification

dbt has built-in data tests that check data quality as part of processing and loading, saving money by ensuring that only trusted data moves downstream. dbt also orchestrates tables in dependency order, which helps avoid errors caused by partial data loads. Previously, if one table loaded successfully while a related one failed, the mismatch led to data errors; with `dbt build`, models downstream of a failed model or test are skipped, so inconsistent data is not propagated.
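The idea of testing data before it is published, and publishing related tables together or not at all, can be sketched in plain Python. This is not how dbt is implemented: the `unique` and `not_null` helpers only mimic dbt's generic tests of the same names, the table names are hypothetical, and a single SQLite transaction stands in for coordinated loading.

```python
import sqlite3


def unique(rows, column):
    """Mimics dbt's `unique` test: no value may appear twice."""
    values = [r[column] for r in rows]
    return len(values) == len(set(values))


def not_null(rows, column):
    """Mimics dbt's `not_null` test: every row must have a value."""
    return all(r[column] is not None for r in rows)


def load_all_or_nothing(conn, batches):
    """Test every batch first, then write all tables in one transaction."""
    for table, rows in batches.items():
        if not (unique(rows, "id") and not_null(rows, "id")):
            raise ValueError(f"quality check failed for {table}.id")
    with conn:  # commits if every insert succeeds, rolls back otherwise
        for table, rows in batches.items():
            # Table names come from our own code, never from user input.
            conn.executemany(f"INSERT INTO {table} (id, value) VALUES (:id, :value)", rows)


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER, value REAL)")
conn.execute("CREATE TABLE sessions (id INTEGER, value REAL)")

load_all_or_nothing(conn, {"orders": [{"id": 1, "value": 10.0}],
                           "sessions": [{"id": 1, "value": 3.0}]})
try:
    # The duplicate session id fails the test, so neither table receives new rows.
    load_all_or_nothing(conn, {"orders": [{"id": 2, "value": 5.0}],
                               "sessions": [{"id": 7, "value": 1.0}, {"id": 7, "value": 2.0}]})
except ValueError as err:
    print(err)  # quality check failed for sessions.id
```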

Combining Data from Various Sources

Combining data from Google Ads, Meta Ads, and CRM into BigQuery creates a centralized data repository. This simplifies the analysis process and enables more accurate and comprehensive results. With dbt, the data loading and transformation process can be automated, reducing the need for manual labor and minimizing the likelihood of errors.
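Once the sources sit in one repository, combining them is mostly a matter of a shared key. The sketch below assumes, hypothetically, that ad platform rows and CRM leads can be matched on a campaign name carried in a UTM parameter; it sums spend across Google Ads and Meta Ads and computes cost per lead.

```python
from collections import defaultdict

# Hypothetical rows already loaded into the warehouse; campaigns share a UTM name.
google_ads = [{"campaign": "spring_sale", "spend": 120.0}]
meta_ads = [{"campaign": "spring_sale", "spend": 80.0},
            {"campaign": "retargeting", "spend": 50.0}]
crm_leads = (
    [{"lead_id": i, "utm_campaign": "spring_sale"} for i in range(1, 5)]
    + [{"lead_id": i, "utm_campaign": "retargeting"} for i in range(5, 7)]
)


def campaign_report(ad_sources, leads):
    """Sum spend across ad platforms, count CRM leads, and join them by campaign."""
    spend = defaultdict(float)
    for rows in ad_sources:
        for row in rows:
            spend[row["campaign"]] += row["spend"]
    lead_counts = defaultdict(int)
    for lead in leads:
        lead_counts[lead["utm_campaign"]] += 1
    return {
        campaign: {
            "spend": total,
            "leads": lead_counts[campaign],
            "cost_per_lead": total / lead_counts[campaign] if lead_counts[campaign] else None,
        }
        for campaign, total in spend.items()
    }


report = campaign_report([google_ads, meta_ads], crm_leads)
```

The report is keyed by campaigns that have spend, so leads from untracked campaigns are left out; in practice that gap is itself worth monitoring.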

Table Partitioning to Save on Processing Costs

Table partitioning in BigQuery is a key feature for optimizing costs and improving data processing efficiency. Partitioning splits a large table into smaller segments, typically by date, so a query that filters on the partitioning column reads only the relevant partitions. Because BigQuery's on-demand pricing is based on the bytes a query processes, this significantly reduces both query time and cost. It is particularly useful for the large volumes of data coming from advertising platforms and CRM systems.
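The effect of partition pruning on the amount of data scanned can be illustrated with a small simulation. The partition sizes are made up; in BigQuery itself, partitioning is declared with a `PARTITION BY` clause when the table is created, and pruning happens when the query filters on the partitioning column.

```python
from datetime import date, timedelta

# Thirty daily partitions of a hypothetical table, each holding 1 MB of data.
partitions = {date(2024, 5, 1) + timedelta(days=i): 1_000_000 for i in range(30)}


def bytes_scanned(partitions, start=None, end=None):
    """Bytes a query reads: only partitions inside [start, end]; no filter means all."""
    return sum(
        size for day, size in partitions.items()
        if (start is None or day >= start) and (end is None or day <= end)
    )


full_scan = bytes_scanned(partitions)  # no date filter: every partition is read
last_week = bytes_scanned(partitions, date(2024, 5, 24), date(2024, 5, 30))
print(full_scan, last_week)  # 30000000 7000000
```

A report that only needs the last seven days reads roughly a quarter of the data here, and the saving grows as the table accumulates history.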

By implementing these strategies, companies can achieve efficient and cost-effective data integration, leading to better decision-making and streamlined business processes.

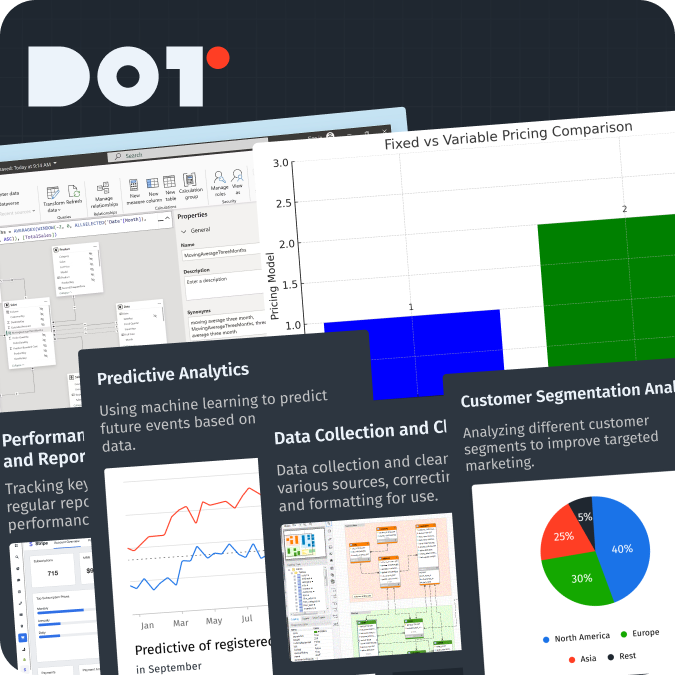

Our Approach to Data Analytics Integration

Utilizing the Full Functionality of dbt

Our approach is based on leveraging the full capabilities of dbt. dbt macros let us build a simple, scalable naming system, so datasets can be created and populated directly from the command line. This adds flexibility and efficiency to data management, simplifies processes, improves data synchronization, and reduces the likelihood of errors.
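In dbt, the schema (a dataset, in BigQuery) that each model is built into is determined by the `generate_schema_name` macro, which a project can override to enforce its own naming convention. The real macros are written in Jinja; the Python below only mirrors the logic of dbt's default macro, which appends a model's custom schema to the target schema, and then shows a hypothetical environment-aware override. It illustrates the kind of rule such a macro encodes, not our actual convention.

```python
from typing import Optional


def generate_schema_name(target_schema: str, custom_schema_name: Optional[str]) -> str:
    """Python mirror of dbt's default generate_schema_name macro."""
    if custom_schema_name is None:
        return target_schema
    return f"{target_schema}_{custom_schema_name.strip()}"


def env_aware_schema_name(target_name: str, target_schema: str,
                          custom_schema_name: Optional[str]) -> str:
    """Hypothetical override: clean dataset names in prod, prefixed names elsewhere."""
    if custom_schema_name is None:
        return target_schema
    if target_name == "prod":
        return custom_schema_name.strip()
    return f"{target_schema}_{custom_schema_name.strip()}"
```

With a rule like this, a developer's run lands in a personal dataset such as `dbt_anna_marketing`, while production builds write to `marketing`, so environments never overwrite each other.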

Using Tags

In our structure, some tables need more frequent updates than others. We use dbt tags to process these tables separately, applying frequent updates only where they are needed. This reduces overall system load and saves significant costs.
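dbt selects models by tag with its node selection syntax, for example `dbt run --select tag:hourly`. The Python below only mimics that selection over a hypothetical project and a hypothetical schedule, to show why tagging reduces the number of model runs.

```python
# Hypothetical project: each model carries the dbt tags assigned in its config.
MODELS = {
    "stg_ga4_events": {"tags": ["hourly"]},
    "stg_google_ads": {"tags": ["daily"]},
    "fct_ad_performance": {"tags": ["daily", "marketing"]},
}


def models_to_run(models, hour):
    """Hypothetical schedule: 'hourly' models run every hour, 'daily' ones at 06:00."""
    due_tags = {"hourly"} | ({"daily"} if hour == 6 else set())
    return sorted(name for name, cfg in models.items() if due_tags & set(cfg["tags"]))


runs_per_day = sum(len(models_to_run(MODELS, hour)) for hour in range(24))
# 26 model runs per day, versus 72 if all three models were refreshed every hour.
```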

Incremental Tables

We actively use partitioned tables and incremental models to save costs and improve efficiency. Partitioned tables break large data volumes into smaller parts, reducing query processing time and costs. Incremental models process only new data, appending new unique records on each daily run, which optimizes both processing and storage.
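In dbt, an incremental model typically filters new rows with an `is_incremental()` check against the maximum timestamp already in the table and, when a `unique_key` is configured, merges them so existing keys are updated rather than duplicated. The plain-Python sketch below mimics that behavior on an in-memory table; the column names `event_id` and `loaded_at` are hypothetical.

```python
def incremental_merge(table, new_rows, key="event_id", ts="loaded_at"):
    """Keep only rows newer than the table's high-water mark, then upsert them by key."""
    watermark = max((row[ts] for row in table.values()), default=None)
    fresh = [row for row in new_rows if watermark is None or row[ts] > watermark]
    for row in fresh:
        table[row[key]] = row  # new keys are inserted, existing keys are updated
    return len(fresh)


# Hypothetical target table, keyed by the unique key; ISO dates compare correctly as strings.
table = {
    1: {"event_id": 1, "loaded_at": "2024-05-01", "amount": 10},
    2: {"event_id": 2, "loaded_at": "2024-05-01", "amount": 20},
}
batch = [
    {"event_id": 2, "loaded_at": "2024-05-01", "amount": 20},  # already loaded: skipped
    {"event_id": 3, "loaded_at": "2024-05-02", "amount": 5},   # new key: inserted
    {"event_id": 2, "loaded_at": "2024-05-02", "amount": 25},  # late correction: updated
]
processed = incremental_merge(table, batch)
```

Only two of the three incoming rows are processed, and the table never scans or rewrites the rows it already holds, which is where the cost saving comes from.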

Data Quality Testing

Our dbt tests include checks for data uniqueness and column data type changes. For instance, if `transaction_id` changes from `int64` to `string`, our dbt tests will detect this change and prevent data contamination in the DWH. In case of such changes, the system issues an error for immediate action, ensuring high data quality, reliability, and data governance.
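Such a check boils down to comparing the column types a model expects with the types actually present. Recent dbt versions can enforce declared column types through model contracts; the Python below is only a standalone sketch of the same comparison. The expected schema is hypothetical, and in BigQuery the actual types could be read from the dataset's `INFORMATION_SCHEMA.COLUMNS` view.

```python
class SchemaChangeError(Exception):
    """Raised when a column's type no longer matches what the model expects."""


# Expected BigQuery types for a hypothetical transactions table.
EXPECTED_SCHEMA = {"transaction_id": "INT64", "amount": "NUMERIC", "created_at": "TIMESTAMP"}


def check_schema(actual, expected=EXPECTED_SCHEMA):
    """Compare actual column types with expected ones and fail loudly on any drift."""
    problems = []
    for column, expected_type in expected.items():
        actual_type = actual.get(column)
        if actual_type is None:
            problems.append(f"missing column {column}")
        elif actual_type.upper() != expected_type:
            problems.append(f"{column}: expected {expected_type}, got {actual_type}")
    if problems:
        raise SchemaChangeError("; ".join(problems))


check_schema({"transaction_id": "INT64", "amount": "NUMERIC", "created_at": "TIMESTAMP"})  # passes

error_message = None
try:
    check_schema({"transaction_id": "STRING", "amount": "NUMERIC", "created_at": "TIMESTAMP"})
except SchemaChangeError as err:
    error_message = str(err)
print(error_message)  # transaction_id: expected INT64, got STRING
```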

Using Google Cloud

We use Google Cloud Platform to save costs and efficiently utilize available resources. Architecturally, some tools are natively connected to others, saving on connectors and minimizing costs. For example, we use BigQuery and native connectors for Google Ads and GA4, reducing data streaming costs to the DWH. BigQuery also has a native connector to Looker Studio, making data visualization easy and cost-effective.

Summary

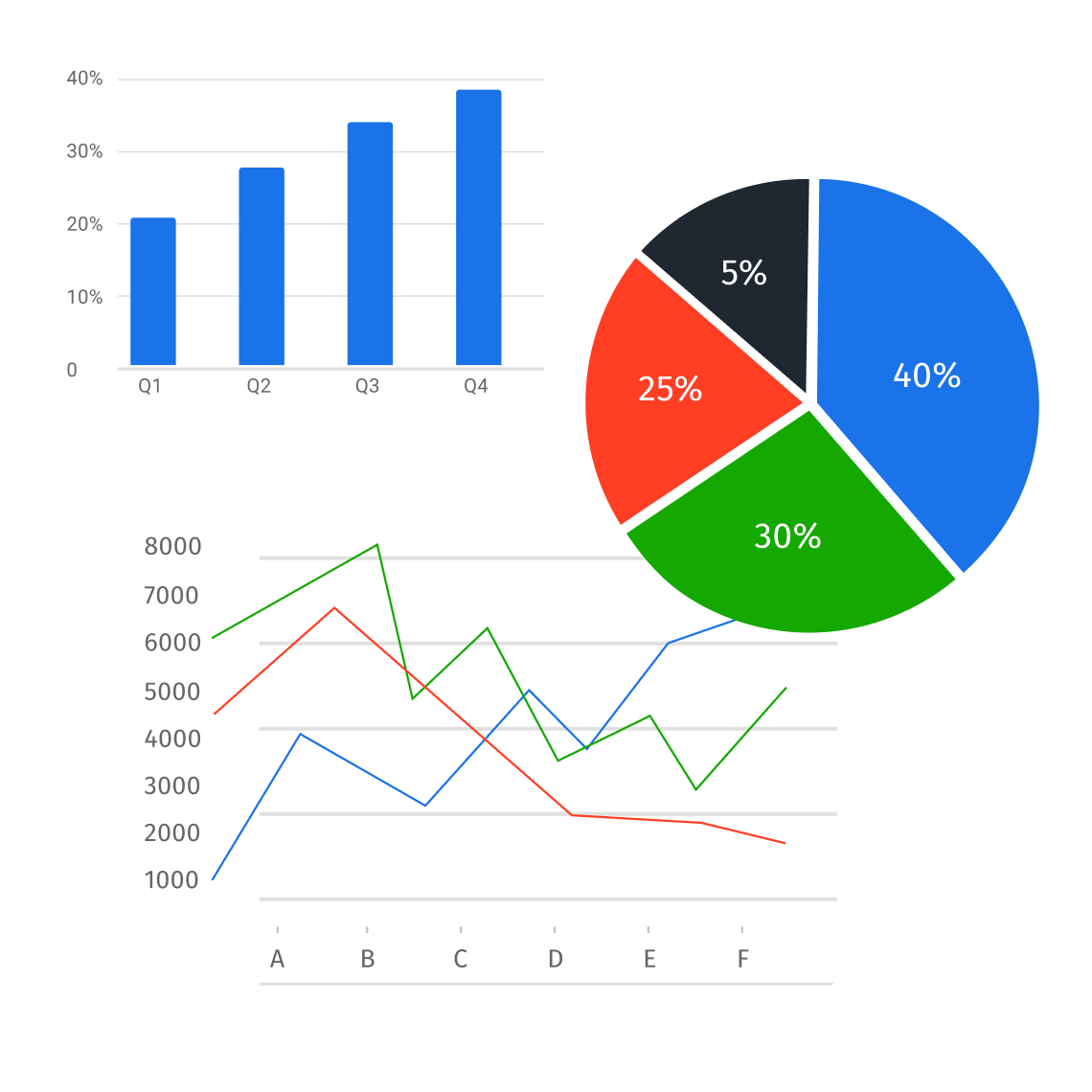

Using dbt and BigQuery for data integration and analytics from Google Ads, Meta Ads, and CRM reduces data processing costs by 40%. Built-in data quality verification mechanisms in dbt and partitioned tables in BigQuery ensure efficient and economical processing of large data volumes, making our data integration approach highly effective. Our approach relies on advanced technologies and best practices to ensure high data quality and cost optimization, enabling our clients to receive accurate and reliable results for informed business decisions.

If you need help with data integration and analytics, contact the Dot Analytics team today to learn how we can support your business.

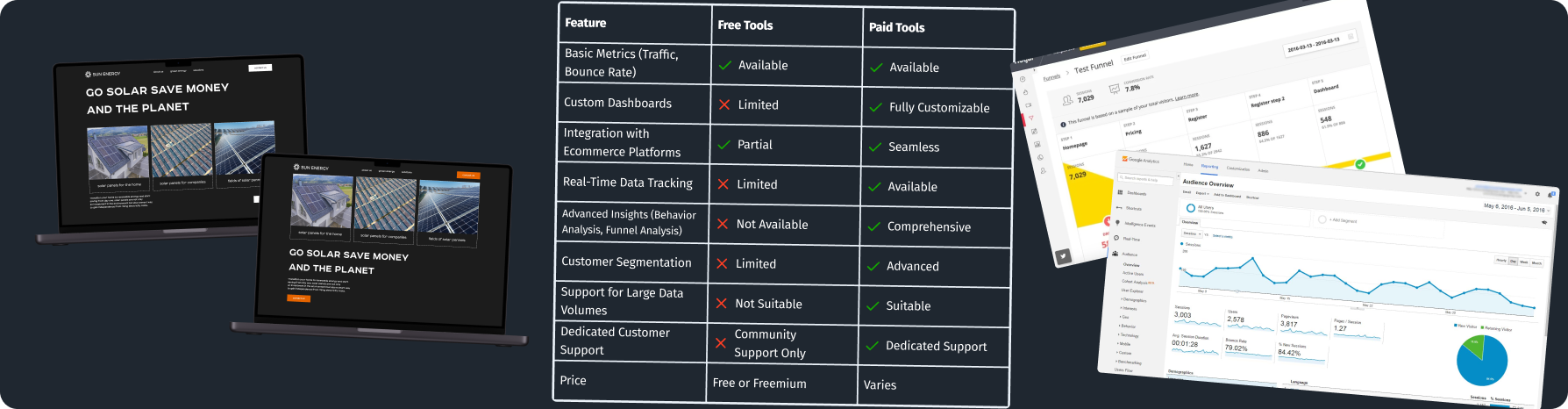

Choosing the Right Data Integration Strategy for Your Business

Firstly, the size of the business plays a crucial role. Small and medium-sized enterprises (SMEs) may need simpler and less costly solutions, while large companies with large data volumes will require more powerful and scalable tools.

For small businesses, where speed and basic functionality matter most, it pays to focus on simple, easy-to-integrate products. For instance, Google lets you connect its data warehouse (BigQuery) natively, at little or no cost, to services such as Google Analytics 4 and Google Ads. This allows businesses to meet basic data integration needs quickly and simplifies decision-making.

For medium and large businesses, where the cost of an error is high, such an approach may be too simplistic. Additional tools make the project structure more complex but also more reliable, reducing or preventing costly errors.

AI systems for anomaly detection and notification, along with table orchestrators that provide testing, alerting, and other features, help tools work together better and create a more stable system for data integration, cleaning, and visualization. In the long term, this supports better growth metrics for companies.
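As a simple stand-in for AI-based anomaly detection, a statistical rule already catches many data incidents: flag a metric when today's value is far from its recent average and send a notification. The sketch below uses a z-score threshold, a deliberately basic technique rather than an AI model; the metric values, the threshold, and the `alerts` list used as a notification channel are assumptions.

```python
from statistics import mean, stdev


def is_anomaly(history, today, threshold=3.0):
    """Flag today's value if it lies more than `threshold` standard deviations from the mean."""
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return today != mu
    return abs(today - mu) / sigma > threshold


alerts = []  # stand-in for a notification channel such as email or chat


def check_metric(name, history, today, notify=alerts.append):
    """Send a notification when today's value looks anomalous."""
    if is_anomaly(history, today):
        notify(f"Anomaly in {name}: {today} vs. recent mean {mean(history):.0f}")


daily_sessions = [1020, 980, 1005, 995, 1010, 990, 1000]  # illustrative last 7 days
check_metric("sessions", daily_sessions, 310)   # sharp drop, e.g. a broken tracking tag
check_metric("sessions", daily_sessions, 1012)  # normal fluctuation, no alert
```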

Conclusions

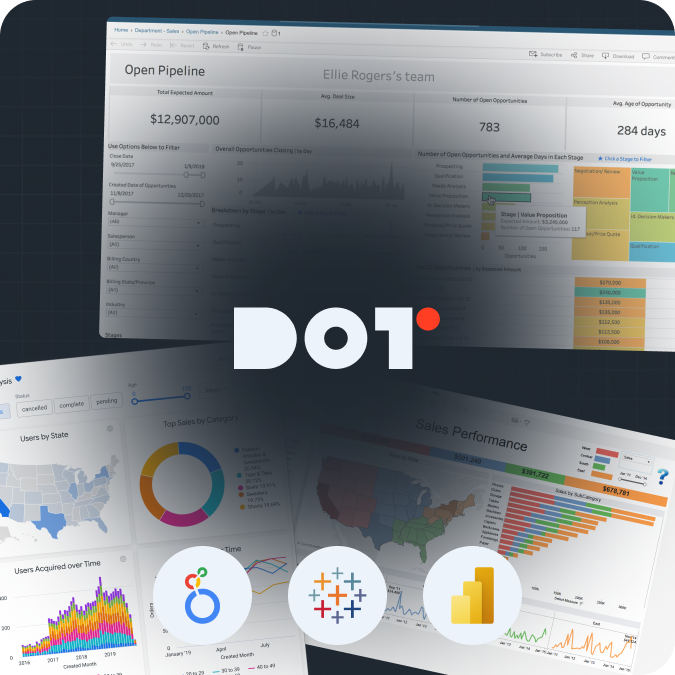

Data integration is a critical process for modern companies aiming to gain a comprehensive view of their business processes and make informed decisions. Utilizing tools like dbt and Google BigQuery ensures efficient data processing from various sources, such as Google Ads, Meta Ads, CRM systems, call tracking, and more. This automation reduces costs and enhances analytical accuracy.

At Dot Analytics, we employ advanced technologies and best practices for data integration, enabling our clients to achieve significant success in their respective fields. Our focus is on cost optimization, ensuring high data quality, and creating stable systems for analytics.

We invite you to take advantage of our services and reach new heights in your business. Contact us to learn more about our solutions and how we can help you achieve your goals. Together, we can make your business more efficient and successful.

Reach out to the Dot Analytics team today to start your journey towards better data integration and analytics!
